While graph neural networks (GNNs) have long been the preferred choice for graph-based learning tasks, they often rely on intricate, custom-designed message-passing mechanisms.

However, a novel approach has emerged that offers comparable or superior performance with a more streamlined methodology.

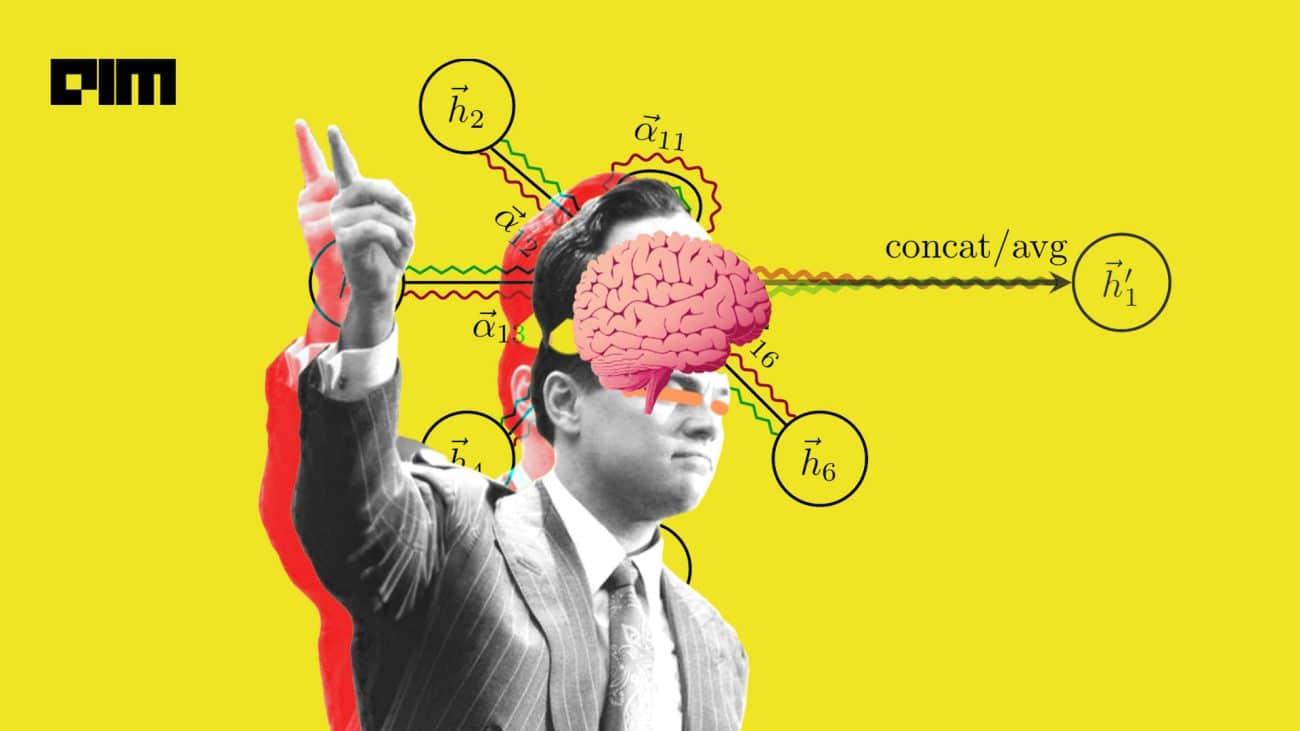

Enter masked attention for graphs (MAG), a technique that rethinks how graphs are represented. MAG treats a graph as a set of nodes or a set of edges and preserves the connections between them by masking the attention weight matrix according to the graph structure.

This simple approach has proven remarkably effective. It outperforms not only strong GNN baselines but also more sophisticated attention-based methods across more than 55 node- and graph-level tasks.
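The masking idea can be made concrete with a minimal Python sketch. It assumes a single attention head with no learned projections, so it is not MAG's actual architecture, and the helper names `node_mask`, `edge_mask` and `masked_attention` are hypothetical. The node-level mask allows attention only along graph edges (plus self-loops). The edge-level mask lets two edges attend to each other when they share an endpoint, which is one plausible way to encode connectivity when edges are the tokens.

```python
import math

NEG_INF = float("-inf")


def node_mask(num_nodes, edges, self_loops=True):
    """Boolean mask: mask[i][j] is True when node i may attend to node j."""
    mask = [[False] * num_nodes for _ in range(num_nodes)]
    for u, v in edges:
        mask[u][v] = True
        mask[v][u] = True  # treat the graph as undirected
    if self_loops:
        for i in range(num_nodes):
            mask[i][i] = True  # every node may also attend to itself
    return mask


def edge_mask(edges):
    """Edge-level mask: edge a may attend to edge b when they share an endpoint."""
    m = len(edges)
    return [[bool(set(edges[a]) & set(edges[b])) for b in range(m)] for a in range(m)]


def masked_attention(q, k, v, mask):
    """Single-head scaled dot-product attention restricted by a boolean mask.

    Assumes every row of the mask allows at least one position (e.g. self-loops).
    """
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        # Disallowed positions get -inf so they receive zero weight after softmax.
        scores = [
            sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) if mask[i][j] else NEG_INF
            for j, kj in enumerate(k)
        ]
        top = max(scores)
        weights = [math.exp(s - top) if s != NEG_INF else 0.0 for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Weighted sum of value vectors, one output vector per query node.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out
```

On a path graph 0-1-2, node 0 receives no weight from node 2 because the mask blocks that pair, even though unmasked attention would let every node see every other node.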

The simplicity of MAG, which relies only on attention without any message passing, is a key finding. This suggests that the carefully designed message passing operators in GNNs can be effectively replaced by attention mechanisms. MAG's superior performance across diverse tasks…

— BensenHsu (@BensenHsu) July 24, 2024

Overcoming Traditional Problems

Junaid Ahmed, a software engineer, noted in a recent LinkedIn post that traditional GNNs have several limitations, including complex design, scalability issues, and variable performance, and that MAG can address them.

Notably, MAG does not use any positional encodings, in contrast to current trends for GNNs and Transformers. Nor does it require sophisticated learning rate schedulers or optimisers.

A professor at Beijing University of Posts and Telecommunications, who goes by fly51fly on X, noted that MAG scales sub-linearly in memory and time with graph size. “It also enables effective transfer learning through pre-training and fine-tuning,” he said.

Another paper, titled ‘Masked Graph Transformer for Large-Scale Recommendation’, proposes a Masked Graph Transformer (MGFormer) architecture that efficiently captures all-pair interactions among nodes in large-scale recommendation systems.

The paper reports that MGFormer outperforms GNN-based models, especially for lower-degree nodes, which suggests it is good at capturing long-range dependencies in sparse recommendation settings.

Even with a single attention layer, MGFormer outperforms the baselines.
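Why all-pair attention helps low-degree nodes can be illustrated with a small receptive-field comparison. This is a sketch of the intuition only, not MGFormer's implementation; the toy user-item graph and the helpers `k_hop_reach` and `full_attention_reach` are hypothetical. After one round of message passing, a sparsely connected user sees only its direct neighbours, whereas a single unmasked attention layer can draw on every node.

```python
from collections import deque


def k_hop_reach(adj, source, hops):
    """Nodes whose information can reach `source` within `hops` rounds of message passing."""
    seen = {source}
    frontier = deque([(source, 0)])
    while frontier:
        node, dist = frontier.popleft()
        if dist == hops:
            continue  # do not expand beyond the hop budget
        for nb in adj.get(node, ()):
            if nb not in seen:
                seen.add(nb)
                frontier.append((nb, dist + 1))
    return seen


def full_attention_reach(adj):
    """With one unmasked (all-pair) attention layer, every node is visible."""
    nodes = set(adj)
    for nbs in adj.values():
        nodes.update(nbs)
    return nodes


# Hypothetical bipartite user-item graph; "u3" is a low-degree user.
adj = {
    "u1": ["i1", "i2"], "u2": ["i1", "i3"], "u3": ["i3"],
    "i1": ["u1", "u2"], "i2": ["u1"], "i3": ["u2", "u3"],
}
print(sorted(k_hop_reach(adj, "u3", 1)))  # ['i3', 'u3']
print(len(full_attention_reach(adj)))     # 6
```

A GNN would need five rounds of message passing before "u3" could see item "i2", while the attention layer covers it in one step.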

Expanding the Horizons of Graphs via Masking

In cybersecurity, the CEAM model (cybersecurity entity alignment via masked graph attention networks) uses an asymmetric masked aggregation mechanism to address the unique challenges of aligning security entities across different data sources.

By selectively propagating specific attributes between entities, CEAM significantly outperforms state-of-the-art entity alignment methods on cybersecurity-domain datasets.
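The idea of an asymmetric mask, where information may flow from one entity to another but not back, can be sketched generically. This is not CEAM's actual mechanism. The entity names, the `allowed` rule set and the helper `asymmetric_masked_aggregate` are hypothetical, and mean aggregation stands in for attention to keep the example short.

```python
def asymmetric_masked_aggregate(features, edges, node_type, allowed):
    """Average each node's vector with those of neighbours it is allowed to read from.

    `edges` are undirected pairs; `allowed` holds (src_type, dst_type) pairs, so the
    mask is asymmetric: src -> dst may be permitted while dst -> src is blocked.
    """
    neighbours = {n: [] for n in features}
    for a, b in edges:
        neighbours[a].append(b)
        neighbours[b].append(a)
    out = {}
    for dst, own in features.items():
        # Keep the node's own vector, then add only the masked-in neighbours.
        sources = [own] + [
            features[src]
            for src in neighbours[dst]
            if (node_type[src], node_type[dst]) in allowed
        ]
        out[dst] = [sum(vals) / len(sources) for vals in zip(*sources)]
    return out


# Hypothetical example: vulnerability attributes may flow into malware entities only.
features = {"vuln_a": [1.0, 0.0], "malware_b": [0.0, 1.0]}
node_type = {"vuln_a": "vulnerability", "malware_b": "malware"}
edges = [("vuln_a", "malware_b")]
allowed = {("vulnerability", "malware")}
print(asymmetric_masked_aggregate(features, edges, node_type, allowed))
```

Here "malware_b" absorbs information from "vuln_a", while "vuln_a" keeps its original vector because the reverse direction is masked out.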

Masking has also found its way into video event recognition through the masked feature modelling (MFM) approach.

MFM utilises a pre-trained visual tokeniser to reconstruct masked features of objects within a video, enabling the unsupervised pre-training of a graph attention network (GAT) block.

When incorporated into a state-of-the-art bottom-up supervised video event recognition architecture, the pre-trained GAT block gives the model a better starting point and improves its overall accuracy.
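The core of masked feature modelling, hiding some object features and scoring the reconstruction only at the hidden positions, can be sketched as follows. This is a simplified sketch, not the MFM method itself: in MFM the targets come from a pre-trained visual tokeniser, whereas here they are simply passed in, mean squared error stands in for the actual objective, and the helpers `mask_objects` and `masked_reconstruction_loss` are hypothetical.

```python
import random


def mask_objects(features, ratio, mask_value=0.0, seed=0):
    """Replace a random subset of object feature vectors with a constant mask vector."""
    rng = random.Random(seed)
    n = len(features)
    k = max(1, round(ratio * n))  # mask at least one object
    masked = set(rng.sample(range(n), k))
    corrupted = [
        [mask_value] * len(f) if i in masked else list(f)
        for i, f in enumerate(features)
    ]
    return corrupted, masked


def masked_reconstruction_loss(predictions, targets, masked):
    """Mean squared error computed only over the masked object positions."""
    errs = [
        sum((p - t) ** 2 for p, t in zip(predictions[i], targets[i])) / len(targets[i])
        for i in masked
    ]
    return sum(errs) / len(errs)
```

Because the loss ignores unmasked positions, the network (the GAT block, in MFM's case) only gets credit for inferring hidden objects from their visible neighbours, which is what makes the pre-training unsupervised.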

In the domain of temporal knowledge graph reasoning, the Attention Masking-based Contrastive Event Network (AMCEN) employs historical and non-historical attention mask vectors to control the attention bias towards historical and non-historical entities.

By separating the exploration of these entity types, AMCEN alleviates the imbalance between new and recurring events in datasets, leading to more accurate predictions of future events.
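A minimal sketch of how historical and non-historical mask vectors can split attention over candidate entities is shown below. It assumes additive masks (0 for allowed, negative infinity for blocked) over a fixed entity set, which may differ from AMCEN's exact formulation; `history_masks` and `masked_softmax` are hypothetical helpers. Each mask yields its own distribution, so entities seen in history cannot crowd out entities that have never appeared.

```python
import math

NEG_INF = float("-inf")


def history_masks(num_entities, historical):
    """Additive masks: one keeps only historical entities, the other only new ones."""
    hist = [0.0 if e in historical else NEG_INF for e in range(num_entities)]
    non_hist = [NEG_INF if e in historical else 0.0 for e in range(num_entities)]
    return hist, non_hist


def masked_softmax(scores, mask):
    """Softmax over scores after adding the mask; masked entries get probability 0.

    Assumes the mask leaves at least one entity unblocked.
    """
    logits = [s + m for s, m in zip(scores, mask)]
    top = max(logits)
    exps = [math.exp(l - top) if l != NEG_INF else 0.0 for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores for four candidate entities; entities 0 and 2 appeared in history.
scores = [2.0, 1.0, 0.5, 3.0]
hist_mask, new_mask = history_masks(4, {0, 2})
print(masked_softmax(scores, hist_mask))  # probability mass only on entities 0 and 2
print(masked_softmax(scores, new_mask))   # probability mass only on entities 1 and 3
```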

Overall, MAG and related masked attention techniques mark an exciting new direction in graph representation learning, offering simpler architectures alongside state-of-the-art performance in applications ranging from recommendation and cybersecurity to video event recognition and temporal knowledge graph reasoning.