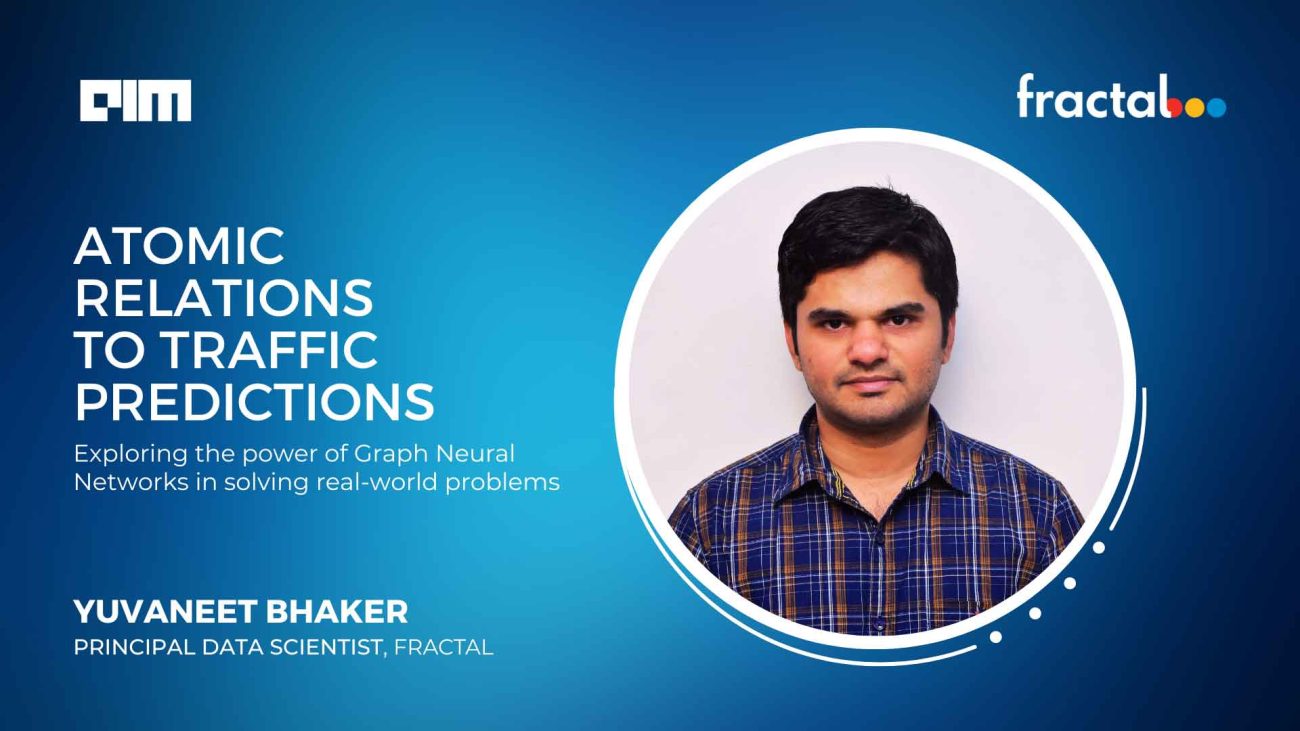

Graph Neural Networks, or GNNs, have grown increasingly popular and are now used in a wide range of projects. A GNN is a type of neural network that can process any data represented as a graph. Of late, GNNs have become vital in scientific discovery, physics simulation, fake news detection, traffic prediction and recommendation systems.

Analytics India Magazine caught up with Yuvaneet Bhaker, Principal Data Scientist at Fractal, to understand more about the scope of GNNs in industries and their relevance in the future.

AIM: How does Fractal use GNNs?

Yuvaneet: We use them for a variety of domain-specific problems, for example, piracy detection in the media and telecom industry. Applications hosting content like live sports and web series run the risk of significant reputation and revenue loss due to piracy events. Piracy can harm organisations, content creators and the industry as a whole. Our approach is to identify suspicious accounts with unusual behaviour. We leverage unsupervised techniques and graph networks to identify pirate accounts. Typically, pirate accounts tend to share devices and IP addresses. We overlay this information with session duration, VPN usage and geography to find improbable browsing behaviour. With the help of driver analysis, we classify such behaviour as normal or pirate (unauthorised). Finally, we use graph embeddings of known pirate accounts to identify similar accounts, so that we can pre-empt and prevent further piracy events.
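To make the "shared devices and IP addresses" idea concrete, here is a minimal sketch, not Fractal's actual pipeline. It assumes a hypothetical session log of `(account, device_id, ip_address)` tuples, links any two accounts that share a device or an IP address, and then groups linked accounts together. All names and values are invented for illustration.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical session log: (account, device_id, ip_address).
sessions = [
    ("acct_a", "dev_1", "10.0.0.1"),
    ("acct_b", "dev_1", "10.0.0.2"),
    ("acct_c", "dev_2", "10.0.0.2"),
    ("acct_d", "dev_3", "10.0.0.9"),
]

def build_account_graph(sessions):
    """Link two accounts whenever they share a device or an IP address."""
    users_of = defaultdict(set)  # resource -> accounts seen on it
    for account, device, ip in sessions:
        users_of[("device", device)].add(account)
        users_of[("ip", ip)].add(account)

    graph = {account: set() for account, _, _ in sessions}
    for accounts in users_of.values():
        for a, b in combinations(sorted(accounts), 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph

def connected_groups(graph):
    """Return connected components as sorted lists of accounts."""
    seen, groups = set(), []
    for start in sorted(graph):
        if start in seen:
            continue
        stack, group = [start], []
        seen.add(start)
        while stack:
            node = stack.pop()
            group.append(node)
            for neighbour in graph[node]:
                if neighbour not in seen:
                    seen.add(neighbour)
                    stack.append(neighbour)
        groups.append(sorted(group))
    return groups

account_graph = build_account_graph(sessions)
groups = connected_groups(account_graph)
```

In this toy data, `acct_a`, `acct_b` and `acct_c` end up in one group because they are chained through a shared device and a shared IP address, while `acct_d` stays on its own. A real system would add the session, VPN and geography signals mentioned above before flagging anything.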

AIM: What are the major applications of GNNs, and what other areas can they be used in?

Yuvaneet: Let me put it this way: wherever there are relationships and interactions between entities, we can use graph networks. For example, people interacting on a social media platform or colleagues interacting in an organisation. Another example would be payment transaction data for a corporate bank. Here the entities include customers and counterparties. These entities interact with each other via transactions, each of which can be described by product, currency, amount, whether it is cross-border, and so on. This network of buyers and suppliers can be used to develop a counterparty persona, generate prospect opportunities and identify potential product offerings. Essentially, in any area where there are interactions and relationships, GNNs can be used for classification analysis.

If we can define the interactions between atoms in a given molecule via chemical bonds, we can use GNNs to understand the molecule's properties. For example, they can help us predict whether it is hydrophobic or hydrophilic, how it might smell, and how it would react with metals or alloys.

Another way to look at the applications of GNNs is through technical implementation: there are various ways of implementing GNNs to solve problems like node classification, subgraph classification and edge classification. Again, the goal is to frame the business problem in a way that lets us leverage GNNs.

Another useful approach worth exploring is graph embeddings. They draw on two types of information: the characteristics of a node itself (its features), and the information propagated to it through interactions with its neighbours and connecting edges.
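The two information sources can be sketched in a few lines. The example below is an illustrative assumption rather than a specific library's method: each node's new embedding is a weighted blend of its own features and the mean of its neighbours' features. The `self_weight` parameter and the toy graph are hypothetical, and edge features are left out for brevity.

```python
def mean_vector(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]

def propagate(features, neighbours, self_weight=0.5):
    """One round of propagation: blend own features with the neighbour mean."""
    updated = {}
    for node, own in features.items():
        nbrs = neighbours.get(node, [])
        if not nbrs:
            # Isolated node: nothing to aggregate, keep its own features.
            updated[node] = list(own)
            continue
        aggregated = mean_vector([features[n] for n in nbrs])
        updated[node] = [
            self_weight * o + (1 - self_weight) * a
            for o, a in zip(own, aggregated)
        ]
    return updated

# Toy path graph a - b - c with two-dimensional node features.
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.0, 0.0]}
neighbours = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}

embeddings = propagate(features, neighbours)
```

After one round, node `c` (which started with all-zero features) already carries part of `b`'s signal. Applying `propagate` again would let information from `a` reach `c` as well.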

AIM: GNNs are growing in popularity. How do they compete with CNNs, possibly the most widely used neural network?

Yuvaneet: CNNs and GNNs largely address different kinds of problems.

Let’s take the example of a picture of a map. A CNN can be used to mark railway stations and the tracks that connect them; it can also detect lakes, mountains and forests along the way. This is done through object detection and segmentation. I would then use a GNN on top of the CNN’s output to see how stations (nodes) and track traffic (edges) behave. The GNN can predict congestion at any given railway station or estimate delays for a given train.

CNNs have been around for longer and have advantages in terms of performance and speed, while GNNs, being newer algorithms, open new doors. GNNs use convolution operations inspired by CNNs (in what are called Graph Convolutional Networks) along with other established methods like batch normalisation and dropout, which help train GNNs effectively. The two have distinct use cases. I don’t see them competing; we get more value by employing each on suitable datasets and problem statements.
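For readers who want to see what a graph convolution looks like, one widely used formulation (from Kipf and Welling's GCN) computes `ReLU(D^-1/2 (A + I) D^-1/2 H W)`, where `A` is the adjacency matrix, `D` is the degree matrix of `A + I`, `H` holds node features and `W` is a learned weight matrix. The sketch below implements that single layer with plain Python lists. The graph, features and weights are made-up values, and a real model would learn `W` by training.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def gcn_layer(adjacency, features, weights):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adjacency)
    # Add self-loops so each node keeps part of its own signal.
    a_hat = [
        [adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    # Symmetric normalisation by the degrees of the self-looped graph.
    inv_sqrt_deg = [1.0 / math.sqrt(sum(row)) for row in a_hat]
    normalised = [
        [inv_sqrt_deg[i] * a_hat[i][j] * inv_sqrt_deg[j] for j in range(n)]
        for i in range(n)
    ]
    out = matmul(matmul(normalised, features), weights)
    return [[max(0.0, value) for value in row] for row in out]  # ReLU

# Toy path graph 0 - 1 - 2 with illustrative features and weights.
adjacency = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights = [[1.0, -1.0], [0.5, 1.0]]

hidden = gcn_layer(adjacency, features, weights)
```

The parallel with CNNs is that each output row mixes a node's features with those of its immediate neighbours, much as a convolution kernel mixes a pixel with its surrounding pixels.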

AIM: A lot of computing is required to adopt GNNs on a large scale, making them harder to train. How do you tackle that?

Yuvaneet: Scaling in the context of graphs means two things. The first is the ability to learn from a large network; the second is computational complexity (time and memory).

The challenge with graphs is that they’re not as quickly interpretable as some other datasets, like images or tabular data. However, we can design our graph network with specific tasks in mind to solve large-scale issues. This includes how we define nodes and edges and which features we select for the problem being solved.

It is equally important to understand graphs through summarisation. We are developing tools to better summarise graph datasets, for example, the distribution of neighbours and edges, along with feature curation techniques. Additionally, we employ dimensionality reduction techniques by leveraging embeddings for nodes and edges.
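A very simple example of such a summary is the degree distribution, that is, how many nodes have one neighbour, two neighbours, and so on. The sketch below assumes an undirected graph given as a hypothetical edge list; it is an illustration, not one of the tools mentioned above.

```python
from collections import Counter

def degree_distribution(edges):
    """Map each degree to the number of nodes with that many neighbours."""
    degree = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    return Counter(degree.values())

# Hypothetical undirected edge list.
edges = [("a", "b"), ("a", "c"), ("a", "d"), ("b", "c")]
histogram = degree_distribution(edges)
```

Here node `a` has three neighbours, `b` and `c` have two each, and `d` has one. On a large graph, a heavy tail in this histogram points to hub nodes that dominate memory and compute during training.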

While training GNNs, we can use batch normalisation, dropout, sampling, faster activations like ReLU, historical node embeddings, pre-computation of feature aggregation, selective use of CPUs and GPUs, and convolution-based architectures for faster and better performance.
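Of the techniques listed, sampling is the easiest to illustrate. One common variant (in the spirit of GraphSAGE-style neighbour sampling; the interview does not say which variant is used) caps the number of neighbours expanded per node, so that a single hub cannot blow up the size of a training batch. The function names, the cap of 5 and the toy graph below are all assumptions for illustration.

```python
import random

def sample_neighbours(neighbours, node, max_neighbours, rng):
    """Return at most max_neighbours neighbours of node, chosen at random."""
    nbrs = neighbours.get(node, [])
    if len(nbrs) <= max_neighbours:
        return list(nbrs)
    return rng.sample(nbrs, max_neighbours)

def sampled_subgraph(neighbours, seed_nodes, max_neighbours, hops, rng):
    """Expand seed nodes hop by hop, sampling a capped set of neighbours."""
    frontier = set(seed_nodes)
    visited = set(seed_nodes)
    edges = []  # (source, target) pairs along which messages would flow
    for _ in range(hops):
        next_frontier = set()
        for node in sorted(frontier):
            for nbr in sample_neighbours(neighbours, node, max_neighbours, rng):
                edges.append((nbr, node))
                if nbr not in visited:
                    visited.add(nbr)
                    next_frontier.add(nbr)
        frontier = next_frontier
    return visited, edges

# Toy star graph: node 0 is a hub connected to 100 leaves.
neighbours = {0: list(range(1, 101))}
for leaf in range(1, 101):
    neighbours[leaf] = [0]

rng = random.Random(0)  # fixed seed so the sketch is repeatable
nodes, edges = sampled_subgraph(
    neighbours, seed_nodes=[0], max_neighbours=5, hops=2, rng=rng
)
```

With the cap in place, the two-hop batch around the hub touches 6 nodes instead of all 101, which is where the time and memory savings come from.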

In future, we anticipate improvement by leveraging the sparse nature of Graphs.

AIM: What is the oft-discussed over-smoothing problem with GNNs?

Yuvaneet: We learn a node’s representation through the propagation of information. This information travels from neighbouring nodes. As the network gets deeper, node representations tend to become similar; this is called smoothing. However, if overdone, it starts harming our objective, and we call it over-smoothing.

For example, imagine a node represented through some embedding. Such embeddings are randomly initialised and iteratively updated based on information from neighbouring nodes and edges. When the network is shallow, embedding updates are localised, and when it is deeper, embeddings cover a larger span. However, in practice, we observe all nodes tend to have the same embedding (entire graph in their span), thus losing discriminatory power and rendering them ineffective for the classification task.
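The effect is easy to reproduce on a toy graph. The sketch below is a deliberately simplified assumption: each "layer" just replaces a node's scalar value with the mean of itself and its neighbours, with no learned weights. It then tracks how far apart the node values remain as depth grows.

```python
def smooth(values, neighbours):
    """One layer: each node takes the mean of itself and its neighbours."""
    return {
        node: (values[node] + sum(values[n] for n in neighbours[node]))
        / (1 + len(neighbours[node]))
        for node in values
    }

def spread(values):
    """Gap between the largest and smallest node value."""
    return max(values.values()) - min(values.values())

# Toy path graph 0 - 1 - 2 - 3 with clearly different starting values.
neighbours = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
values = {0: 1.0, 1: 0.0, 2: 0.0, 3: -1.0}

spread_by_depth = {}
current = dict(values)
for depth in range(1, 33):
    current = smooth(current, neighbours)
    spread_by_depth[depth] = spread(current)
```

The spread shrinks with every layer and is close to zero by depth 32, meaning the four nodes have become practically indistinguishable. Real GNN layers have weights and non-linearities, but the same averaging pressure is what drives over-smoothing.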

The first step in mitigating over-smoothing is to understand the issue by quantifying it, through measures like the information-to-noise ratio and metrics such as MADGap. The next step is to use sampling, regularisation, normalisation, or techniques like residual links with identity weights.
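As a rough illustration of the quantification step, the sketch below follows the idea behind MADGap: compare the average cosine distance between embeddings of remote node pairs with that of neighbouring pairs. It is a simplified reading, not the published definition, which includes masking and hop-threshold details omitted here. The pair lists and embeddings are invented.

```python
import math

def cosine_distance(u, v):
    """1 - cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm if norm else 0.0

def mean_distance(embeddings, pairs):
    """Average cosine distance over the given node pairs."""
    distances = [cosine_distance(embeddings[a], embeddings[b]) for a, b in pairs]
    return sum(distances) / len(distances)

def distance_gap(embeddings, neighbour_pairs, remote_pairs):
    """Remote-pair distance minus neighbour-pair distance (simplified gap)."""
    return mean_distance(embeddings, remote_pairs) - mean_distance(
        embeddings, neighbour_pairs
    )

# Hypothetical graph: nodes 0-1 and 2-3 are neighbours, the rest are remote.
neighbour_pairs = [(0, 1), (2, 3)]
remote_pairs = [(0, 2), (0, 3), (1, 2), (1, 3)]

healthy = {0: [1.0, 0.1], 1: [0.9, 0.2], 2: [0.1, 1.0], 3: [0.2, 0.9]}
over_smoothed = {0: [0.5, 0.5], 1: [0.5, 0.51], 2: [0.51, 0.5], 3: [0.5, 0.5]}

healthy_gap = distance_gap(healthy, neighbour_pairs, remote_pairs)
smoothed_gap = distance_gap(over_smoothed, neighbour_pairs, remote_pairs)
```

A clearly positive gap means neighbours look alike while distant nodes stay distinct, which is the desired kind of smoothing. A gap near zero, as with the `over_smoothed` embeddings, signals that all nodes have collapsed towards the same representation.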