Graph neural networks are one of the hot research topics in data science and machine learning because they can learn directly from graph data and often provide more accurate results. Researchers have developed a variety of state-of-the-art graph neural networks. A graph attention network is one such network: it applies an attention mechanism over the nodes of the graph. In this article, we are going to discuss the graph attention network. The major points to be discussed in the article are listed below.

Table of contents

- What is a graph attention network?

- Graph neural network (GNN)

- Attention layer

- Combination of GNN and attention layer

- The benefit of adding attention to GNN

- The architecture of graph attention network

- Advantages of the graph attention network

Let’s start by understanding a graph attention network.

What is a graph attention network?

As the name suggests, the graph attention network is a combination of a graph neural network and an attention layer. To understand graph attention networks, we first need to understand what an attention layer and a graph neural network are. So this section is divided into subsections: first we will look at the basics of the graph neural network and the attention layer, then we will focus on the combination of both. Let’s take a look at the graph neural network.

Graph neural network (GNN)

In one of our articles, we implemented a graph neural network and discussed that graph neural networks are networks capable of working with graph-structured data. There are various benefits of using graph-structured data in our projects: such structures hold information in the form of the nodes (vertices) and edges of the graph, which makes it easier for a neural network to learn the data points and the relationships between them. For example, the data for a classification problem can consist of labels attached to the nodes and relationship information carried by the edges.

Much real-world data is very large and carries structural information of its own. On such data, a graph neural network can provide state-of-the-art performance.
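To make the idea of graph-structured data concrete, here is a minimal sketch of how a small graph could be stored for a neural network. The toy edge list, the feature values, and the variable names are all made up for illustration; real projects usually rely on a dedicated graph library.

```python
import numpy as np

# Hypothetical toy graph: 4 nodes, undirected edges given as (source, target) pairs.
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
num_nodes = 4

# Adjacency matrix: A[i, j] = 1 when nodes i and j are connected.
A = np.zeros((num_nodes, num_nodes))
for i, j in edges:
    A[i, j] = 1.0
    A[j, i] = 1.0  # undirected graph, so the matrix is symmetric

# Node feature matrix: one row of (made-up) features per node.
X = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [1.0, 1.0],
              [0.0, 0.0]])

# Neighbours of each node, read off the adjacency matrix.
neighbours = {i: np.flatnonzero(A[i]).tolist() for i in range(num_nodes)}
```

The adjacency matrix carries the structure and the feature matrix carries the per-node information; a graph neural network consumes both together.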

Attention layer

In one of our articles, we have discussed that the attention layer is a layer that enables us to design a neural network that can remember long sequences of information. Generally, we find such layers used in neural machine translation problems. A standard encoder-decoder network works by encoding the sequential information into a single compressed context vector.

If an attention layer is included, the network creates shortcut connections between the inputs and the context vector, and the attention layer adjusts the weights of these connections for every output. Since these connections give the context vector access to all input values, the problem of the standard network forgetting long sequences is resolved.

In simple words, we can say that adding an attention layer to a neural network helps it attend to the important information in the data instead of treating the whole input equally. This way we can make our neural network more reliable by keeping it focused on the information that matters.

So far we have been applying the attention layer to a sequence model, but this article focuses on applying the attention mechanism to a graph neural network. Let’s see what applying attention to graph neural networks means.
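The weighting described above can be sketched in a few lines. This is only an illustration under stated assumptions: the dot-product scoring, the function names `softmax` and `attend`, and the random inputs are our own choices, not something fixed by the attention layer discussed here.

```python
import numpy as np

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    exp = np.exp(scores - scores.max())
    return exp / exp.sum()

def attend(query, encoder_states):
    """Return a context vector and attention weights for one output step."""
    # One relevance score per input position (assumed: dot product with the query).
    scores = encoder_states @ query
    weights = softmax(scores)
    # The context vector is a weighted average of all encoder states.
    context = weights @ encoder_states
    return context, weights

rng = np.random.default_rng(0)
encoder_states = rng.normal(size=(5, 8))  # 5 input positions, 8 features each
query = rng.normal(size=8)                # hypothetical decoder state
context, weights = attend(query, encoder_states)
```

Because the weights are recomputed for every output step, each output can draw on a different mix of the inputs.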

Combination of GNN and attention layer

In the above points, we have discussed that a graph neural network is a better way to deal with data that carries rich structural information, and that an attention layer is a mechanism that helps in extracting only the useful information from long or large data. When the two are combined, we call the result a graph attention network.

A graph attention network can also be explained as leveraging the attention mechanism in graph neural networks so that we can address some of their shortcomings. Let’s take the example of graph convolutional networks, which are generally used to solve problems where the data contains structural information.

These graph networks apply stacked layers in which each node aggregates the features of its neighbour nodes. Applying attention lets the network assign different weights to the different nodes within a neighbourhood. In this way, we make the network capable of relying mainly on the node information that is useful.

What matters most here is understanding how important each neighbour node is to the outcome. These methods can be applied to inductive as well as transductive problems. We can also say that applying attention is a way to improve the graph neural network. Let’s see what gets improved by applying the attention mechanism to the graph neural network.

Benefit of adding attention to GNN

This section takes a graph convolutional network (GCN) as our example GNN. By now we know that graph neural networks are good at classifying nodes in graph-structured data. One shortcoming we may find in many problems is that the way a graph convolutional network aggregates information depends on the graph structure, which can harm its generalizability. Applying a graph attention network to those problems changes the way the information is aggregated. The GCN computes a normalized sum of neighbour node features as follows:

$$h_i^{(l+1)} = \sigma\left(\sum_{j \in N(i)} \frac{1}{c_{ij}} W^{(l)} h_j^{(l)}\right)$$

Where,

$N(i)$ = set of the connected nodes

$c_{ij}$ = normalization on graph structure

$\sigma$ = activation function

$W^{(l)}$ = weight matrix
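The GCN sum above can be sketched in NumPy as follows. Several details are assumptions on our part rather than something the formula fixes: self-loops are added, the normalization constant is taken as the square root of the product of the two node degrees, ReLU is used as the activation, and the toy graph, features, and weights are made up.

```python
import numpy as np

def gcn_layer(A, H, W):
    """One GCN layer: h_i' = relu(sum_j (1 / c_ij) * W h_j) over neighbours j."""
    # Assumption: add self-loops so each node also keeps its own features.
    A_hat = A + np.eye(A.shape[0])
    deg = A_hat.sum(axis=1)
    # Assumption: c_ij = sqrt(deg_i * deg_j), a symmetric normalization.
    norm = A_hat / np.sqrt(np.outer(deg, deg))
    # Aggregate the transformed neighbour features, then apply ReLU.
    return np.maximum(norm @ (H @ W), 0.0)

# Hypothetical toy graph with 4 nodes and 2 features per node.
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
H = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]])
W = np.random.default_rng(0).normal(size=(2, 3))  # maps 2 features to 3

H_next = gcn_layer(A, H, W)
```

Note that the weight given to each neighbour here depends only on node degrees, so it is fixed by the graph structure and not learned.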

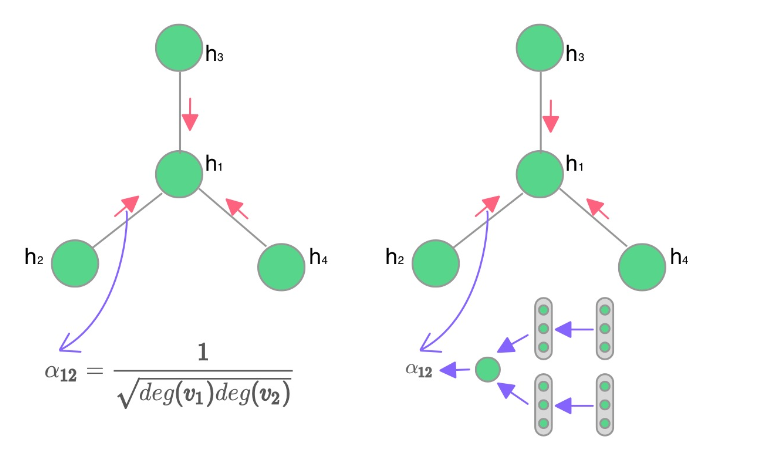

The graph attention network keeps a similar sum but replaces the statically normalized convolution operation with weights computed by attention. The accompanying figure is a representation of the difference between standard GCN and GAT.

From the above, we can say that by applying attention to the network, more important nodes get higher weights during the neighbourhood aggregation.

The architecture of graph attention network

In this section, we will look at the architecture that we can use to build a graph attention network. Generally, such networks are built by stacking layers. We can understand the architecture by understanding the work of three main layers.

Input layer: The input layer takes a set of node features and produces a new set of node features as its output. It does this by applying a learnable linear transformation to the input node features.

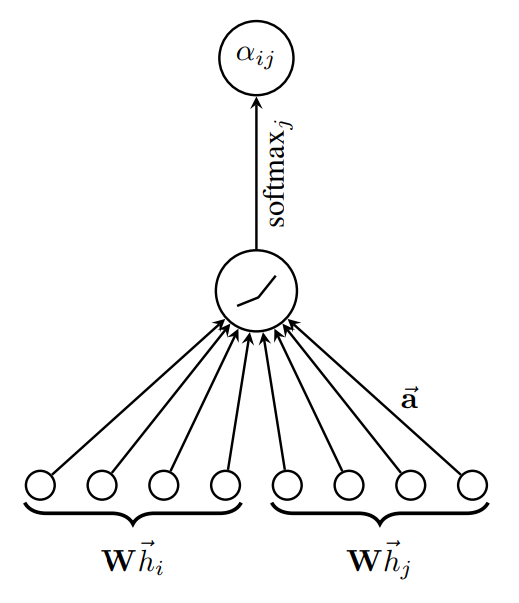

Attention layer: After transforming the features, an attention layer is applied to the output of the input layer, which is parameterized by a weight matrix. Because this same weight matrix is applied to every node, we can perform self-attention on the nodes. Mechanically, we can employ a single-layer feed-forward neural network as our attention mechanism; normalizing its scores over each node's neighbours gives us the normalized attention coefficients.

The above image is a representation of the attention layer applied to the GCN.
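The attention layer described above can be sketched as follows. We assume the scoring used in the original GAT formulation, where the raw score for an edge is LeakyReLU applied to a weight vector `a` dotted with the concatenation of the two transformed node features, followed by a softmax over each neighbourhood. The function names, the self-loops, the slope of 0.2, and the toy inputs are illustrative assumptions.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def attention_coefficients(A, H, W, a):
    """Normalized attention coefficients alpha[i, j] of node i over node j."""
    Z = H @ W                      # shared linear transformation, shape (N, F')
    f_out = Z.shape[1]
    # a^T [z_i || z_j] splits into a term for node i plus a term for node j.
    src = Z @ a[:f_out]
    dst = Z @ a[f_out:]
    e = leaky_relu(src[:, None] + dst[None, :])   # raw scores e[i, j]
    # Masked attention: only neighbours (and the node itself) receive weight.
    mask = (A + np.eye(A.shape[0])) > 0
    e = np.where(mask, e, -np.inf)
    # Softmax over each row, i.e. over the neighbourhood of node i.
    e = e - e.max(axis=1, keepdims=True)
    exp = np.exp(e)
    return exp / exp.sum(axis=1, keepdims=True)

# Hypothetical toy graph with 4 nodes and 2 features per node.
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
H = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]])
rng = np.random.default_rng(0)
W = rng.normal(size=(2, 3))   # input layer weights
a = rng.normal(size=6)        # attention weights, length 2 * F'

alpha = attention_coefficients(A, H, W, a)
```

Each row of `alpha` sums to one, and entries for unconnected node pairs are exactly zero, so a node only attends to its own neighbourhood.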

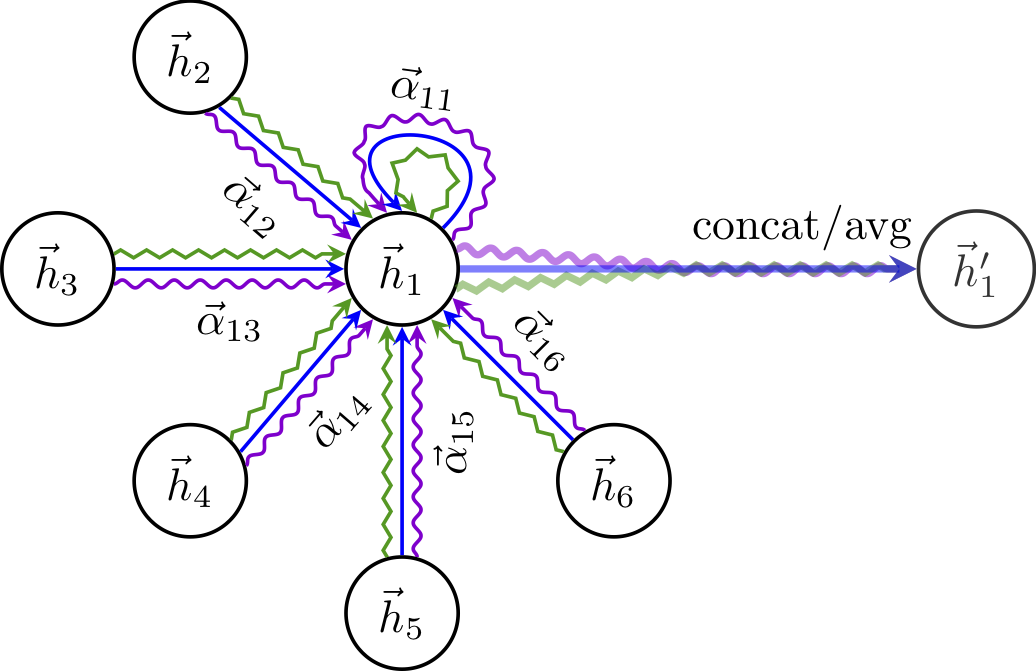

Output layer: After obtaining the normalized attention coefficients, we can use them to compute a weighted combination of the neighbours' features and serve the result as the final features from the network. To stabilize the attention process, we can use multi-head attention, in which several independent attention heads each perform the transformation and their output features are concatenated.

The above image is a representation of multi-head attention applied to stabilize the process of self-attention: each head computes its own attention, and the aggregated features are concatenated.
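Putting the three layers together, a multi-head layer could look roughly like the sketch below. It is a simplified illustration, not a reference implementation: the helper names `gat_head` and `multi_head_gat`, the ELU output activation, the choice of three heads, and the random toy inputs are all assumptions.

```python
import numpy as np

def gat_head(A, H, W, a, slope=0.2):
    """One attention head: attention-weighted sum of transformed neighbour features."""
    Z = H @ W
    f_out = Z.shape[1]
    scores = (Z @ a[:f_out])[:, None] + (Z @ a[f_out:])[None, :]
    scores = np.where(scores > 0, scores, slope * scores)  # LeakyReLU
    # Restrict attention to neighbours and the node itself.
    mask = (A + np.eye(A.shape[0])) > 0
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=1, keepdims=True)
    alpha = np.exp(scores)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)
    return alpha @ Z

def multi_head_gat(A, H, heads):
    """Run every head independently and concatenate the output features."""
    concat = np.concatenate([gat_head(A, H, W, a) for W, a in heads], axis=1)
    # Assumption: ELU as the output nonlinearity.
    return np.where(concat > 0, concat, np.expm1(np.minimum(concat, 0.0)))

# Hypothetical toy graph with 4 nodes and 2 features per node.
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
H = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]])
rng = np.random.default_rng(0)
# Three heads, each with its own weight matrix W and attention vector a.
heads = [(rng.normal(size=(2, 3)), rng.normal(size=6)) for _ in range(3)]

out = multi_head_gat(A, H, heads)
```

With three heads of three output features each, every node ends up with nine features. Concatenation is the usual choice for hidden layers; averaging the heads instead is a common alternative for a final prediction layer.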

Advantages of the graph attention network

There are various benefits of graph attention networks. Some of them are as follows:

- The attention computation can be parallelized across the edges of the graph, and the aggregation across the nodes, which makes the graph attention network computationally efficient.

- Applying attention lets the model assign different importance to nodes in the same neighbourhood, which can increase the capacity and accuracy of the model because it concentrates on the important information rather than on all of the data equally.

- If the attention mechanism is applied in a shared manner to all edges of the graph, it does not depend on the global graph structure, so the network can be used directly for inductive learning.

- Analyzing the learned attention weights can make the network more interpretable.

Final words

In this article, we have discussed the graph attention network, which is a combination of the graph neural network and the attention layer. Applying attention to the GNN can improve the results and also has several benefits, which have been discussed in this article.