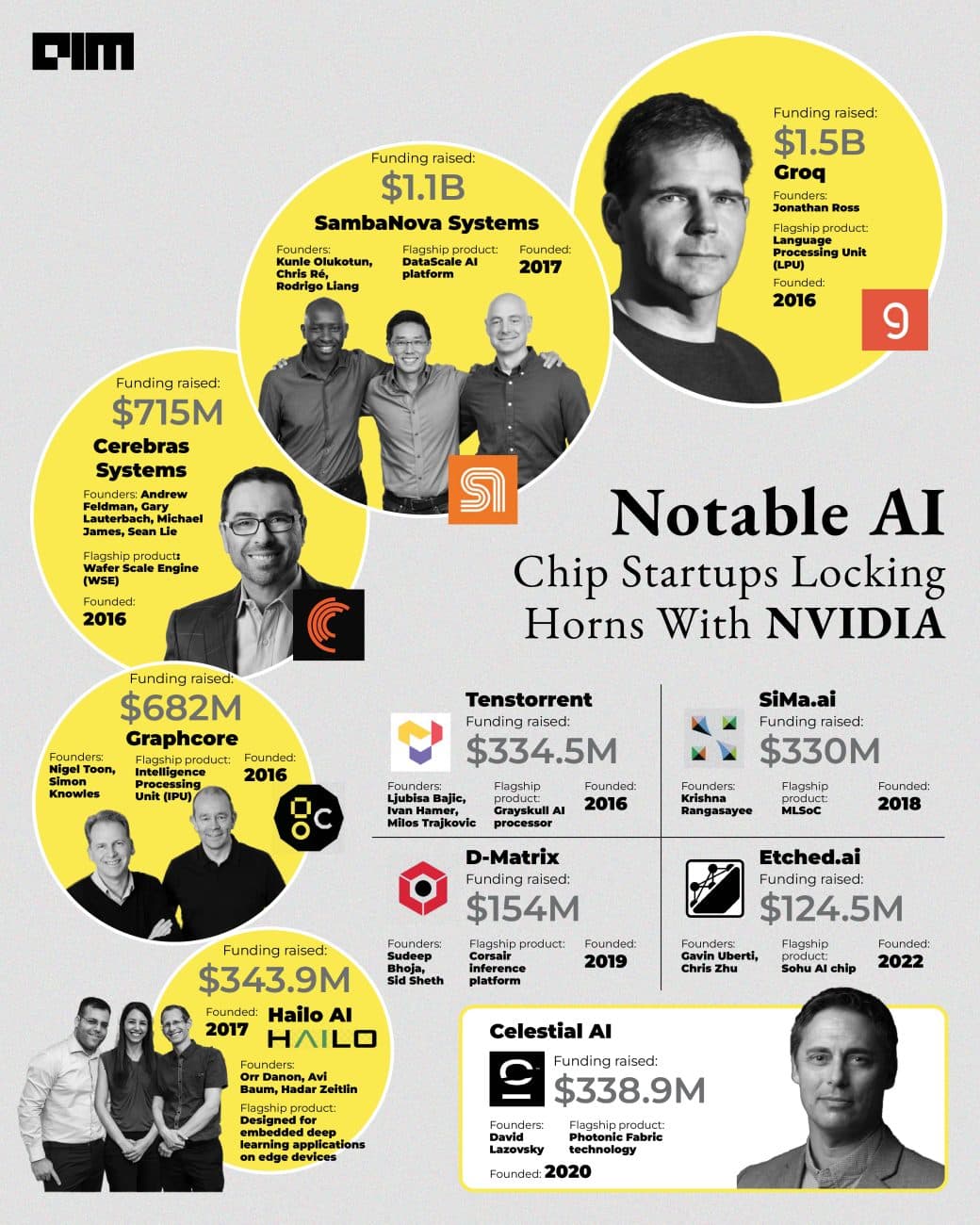

NVIDIA may reign as the king of GPUs, but competition is heating up. In recent years, a wave of startups has emerged, taking on the Jensen Huang-led giant at its own game.

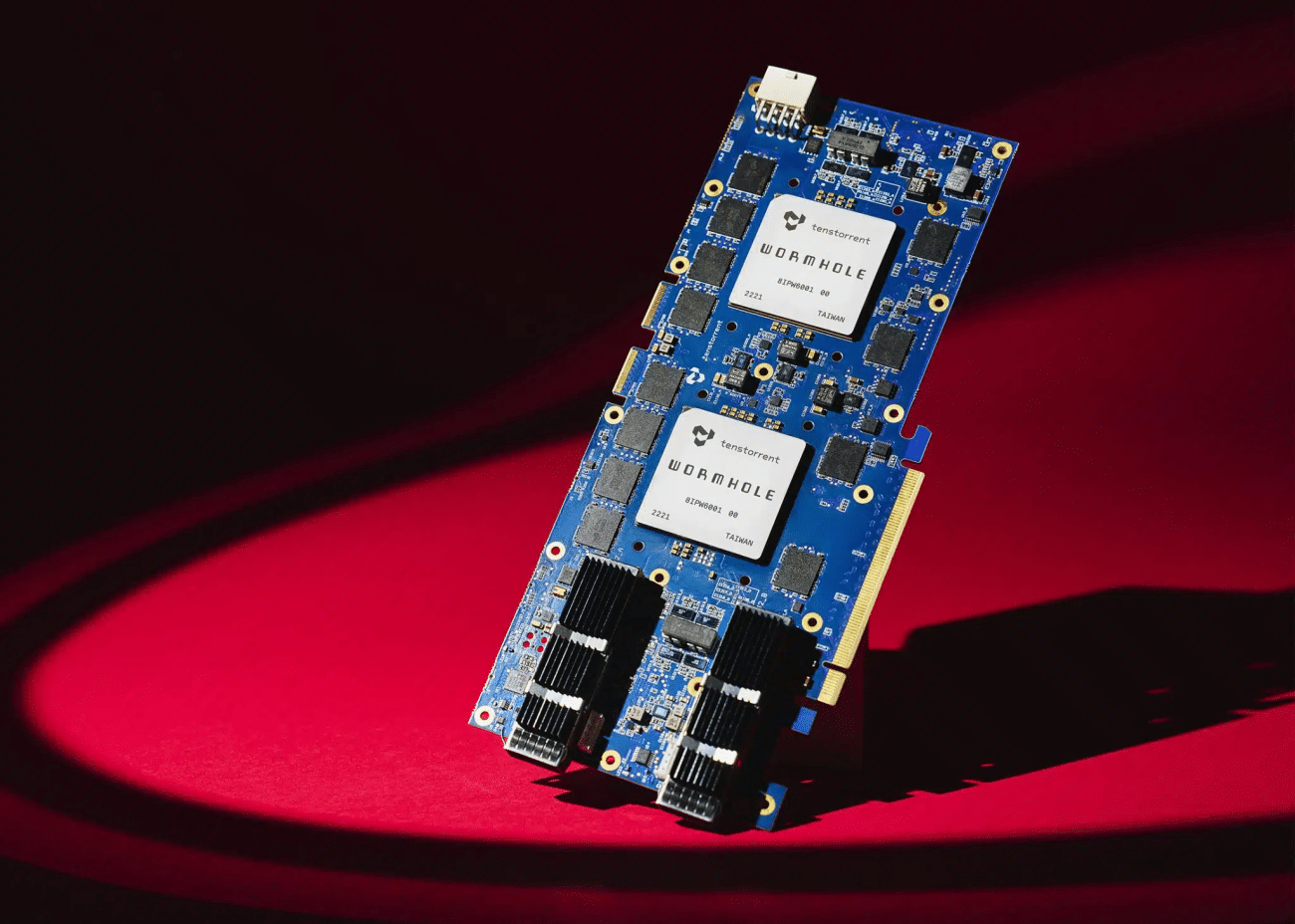

Tenstorrent, a startup led by Jim Keller, the lead architect of AMD’s K8 microarchitecture, is developing AI chips that the company claims perform better than NVIDIA’s GPUs.

“We have a very power-efficient compute, where we can put 32 engines in a box, the same size as NVIDIA puts eight. With our higher compute density and similar power envelope, we outperform NVIDIA by multiples in terms of performance, output per watt, and output per dollar,” Keith Witek, chief operating officer at Tenstorrent, told AIM.

(Wormhole by Tenstorrent)

NVIDIA’s data-centre chips rely on high-bandwidth memory (HBM) chips mounted on silicon interposers. Companies like Samsung and SK Hynix, along with NVIDIA, have made millions selling these chips. Tenstorrent’s chips, however, eliminate the need for them.

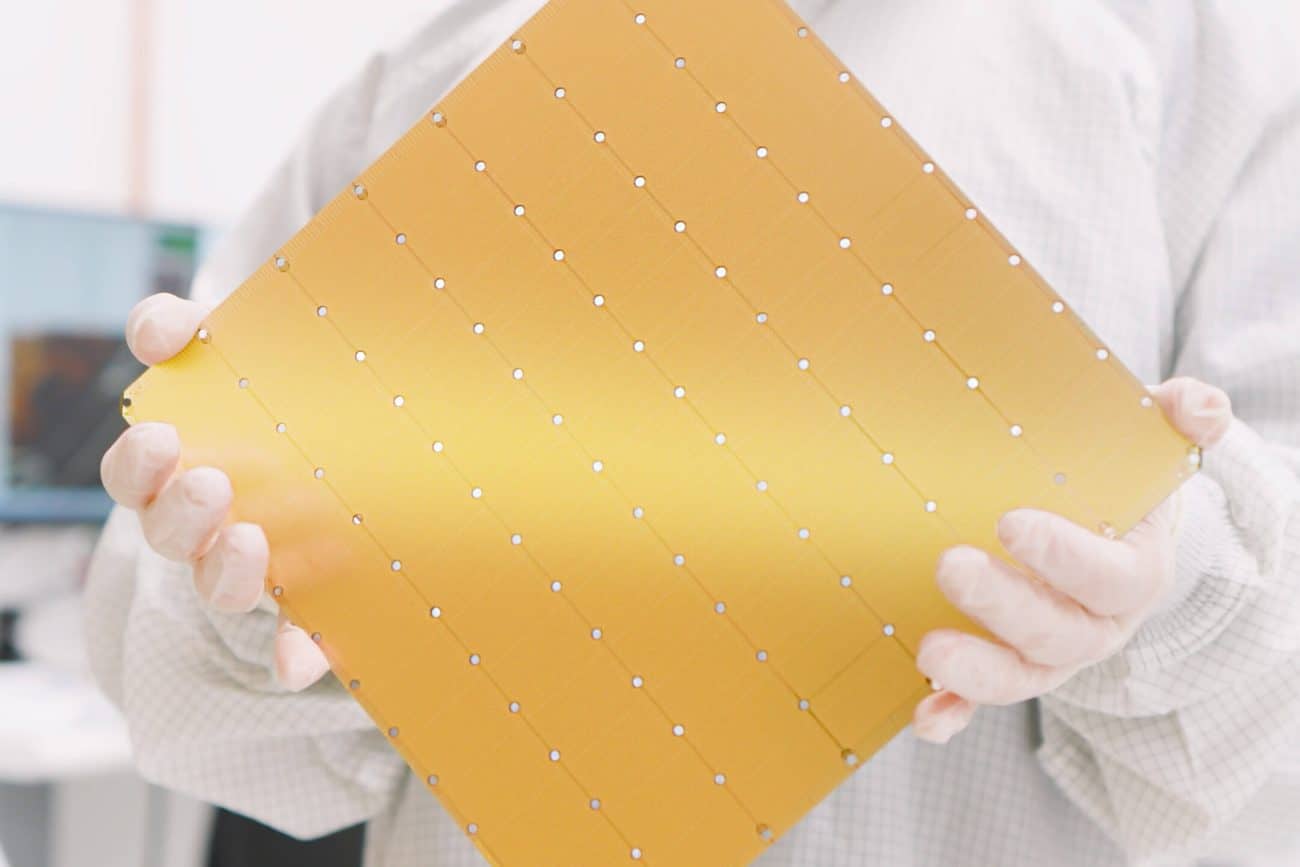

Similarly, Cerebras Systems, founded by Andrew Feldman in 2015, has developed chips for generative AI workloads such as model training and inference. Its chip, the WSE-3, is the world’s largest AI chip, with over 4 trillion transistors and 46,225 mm² of silicon.

The startup claims its chips are 8x faster than NVIDIA DGX H100 and are designed specifically to train large models.

(The world’s largest AI chip: WSE-3)

Startups Building for the Inference Market

There are startups developing chips designed specifically for inferencing. While NVIDIA’s GPUs are in great demand because they are instrumental in training AI models, for inference, they might not be the best tool available.

D-Matrix, a startup founded by Sid Sheth, is developing silicon optimised for inference. Its flagship product, Corsair, is designed specifically for inference on generative AI models of 100 billion parameters or fewer, and the company says it is much more cost-effective than GPUs.

“We believe that a majority of enterprises and individuals interested in inference will prefer to work with models up to 100 billion parameters. Deploying larger models becomes prohibitively expensive, making it less practical for most applications,” he told AIM.

Another startup that is locking horns with NVIDIA in this space is Groq, founded by Jonathan Ross in 2016. According to Ross, his product is 10 times faster, 10 times cheaper, and consumes 10 times less power.

Groq’s hardware is designed to deliver high performance on inference tasks, which are critical for deploying AI models in production environments.

Recently, Cerebras also entered this market with Cerebras Inference, which it claims is the fastest AI inference solution in the world. It delivers 1,800 tokens/sec for Llama 3.1 8B and 450 tokens/sec for Llama 3.1 70B, which the company says is 20x faster than NVIDIA GPU-based hyperscale clouds.

Challengers in the Edge AI Market

While NVIDIA may have made its name and money by selling GPUs, it has also expanded into other segments over the years, such as chips for humanoids, drones, and IoT devices.

SiMa.ai, a US-based startup with strong roots in India, is building chips that can run generative AI models on the embedded edge. Founded by Krishna Rangasayee in 2018, the startup sees NVIDIA as its biggest competitor.

Rangasayee believes multimodal AI is the future, and the startup’s second-gen chip is designed to run generative AI models on the edge: in cars, robotic arms, humanoids, and drones.

“Multimodal is going to be everywhere, from every device to appliances, be it a robot or an AI PC. You will be able to converse, watch videos, parse inputs, just like you talk to a human being,” he told AIM.

Notably, SiMa.ai’s first chip, designed to run computer vision models on the edge, beat NVIDIA on the MLPerf benchmarks. Another NVIDIA competitor in this space is Hailo AI, which is also building chips that run generative AI models on the edge.

Everyone Wants a Piece of the Pie

Notably, these startups are not seeking a niche within the semiconductor ecosystem. Instead, they are focused on delivering top-tier products and are unafraid to compete directly with NVIDIA.

They all want a piece of the pie and are already locking horns with NVIDIA.

D-Matrix, for instance, counts Microsoft, one of the AI model builders, among its customers. Sheth revealed that the company has customers in North America, Asia, and the Middle East, and has signed a multi-million dollar contract with one of them. This matters because Microsoft is also one of NVIDIA’s biggest enterprise customers.

Cerebras also counts some of the top research and supercomputing labs among its customers. Riding on this success, the startup plans to go public this year.

Rangasayee previously told AIM that his startup is in talks with many robotics companies, startups building humanoids, public sector companies as well as some of the top automobile companies in the world.

They All Might Lose to CUDA

All these startups have made substantial progress and some are preparing to launch their products in the near future. While having advanced hardware is crucial, the real challenge for these companies will be competing against a monster – CUDA.

These startups, which position themselves as software companies that build their own hardware, have developed their own software stacks to make their hardware compatible with their customers’ applications.

For example, Tenstorrent’s open-source software stack, Metalium, is similar to CUDA but less cumbersome and more user-friendly. With Metalium, users can write algorithms and program models directly on the hardware, bypassing layers of abstraction.

Interestingly, Tenstorrent has a second software stack called BUDA, which represents the envisioned future utopia, according to Witek.

“Eventually, as compilers become more sophisticated and AI hardware stabilises, reaching a point where they can compile code with 90% efficiency, the need for hand-packing code in the AI domain diminishes.”

Nonetheless, it remains to be seen how these startups compete with CUDA. Intel and AMD have been trying for years, yet CUDA remains NVIDIA’s moat.

“All the maths libraries… and everything is encrypted. In fact, NVIDIA is moving its platform more and more proprietary every quarter. It’s not letting AMD and Intel look at that platform and copy it,” Witek said.