Graphics processing units (GPUs) have become the most coveted hardware in the AI realm, but their status as the most sought-after component may wane.

Unprecedented demand for GPUs has made NVIDIA a trillion-dollar company. However, even NVIDIA is starting to move its architecture away from its graphics-chip origins, according to Keith Witek, chief operating officer at Tenstorrent.

“They’re even moving their architecture towards the heterogeneous compute, which looks a bit more like a tensor computer.

“So yes, I think it will trend in that direction. And even the guys in the graphics business of AI are realising the benefits of drifting their architecture in that direction,” Witek told AIM in an exclusive interaction.

He advocates for system-on-chip (SoC) architectures incorporating tensor units, graph units, and CPUs, asserting that heterogeneous computing utilising both CPUs and graph processors is the optimal approach for handling future workloads.

Recently, big tech companies like Microsoft and AWS, which are among NVIDIA’s biggest enterprise customers, have developed their own AI chips to reduce their dependence on NVIDIA’s GPUs and cut costs.

At the recently held Google I/O 2024, the tech giant announced Trillium, its sixth-generation TPU, designed to handle AI workloads more efficiently.

Interestingly, the chips designed by AWS, Microsoft and Google also have heterogeneous architectures. For example, Microsoft's Azure Maia AI Accelerator and Azure Cobalt CPU integrate different specialised compute engines and accelerators on the same chip.

Similarly, AWS's Inferentia and Trainium chips combine several specialised compute engines on a single chip.

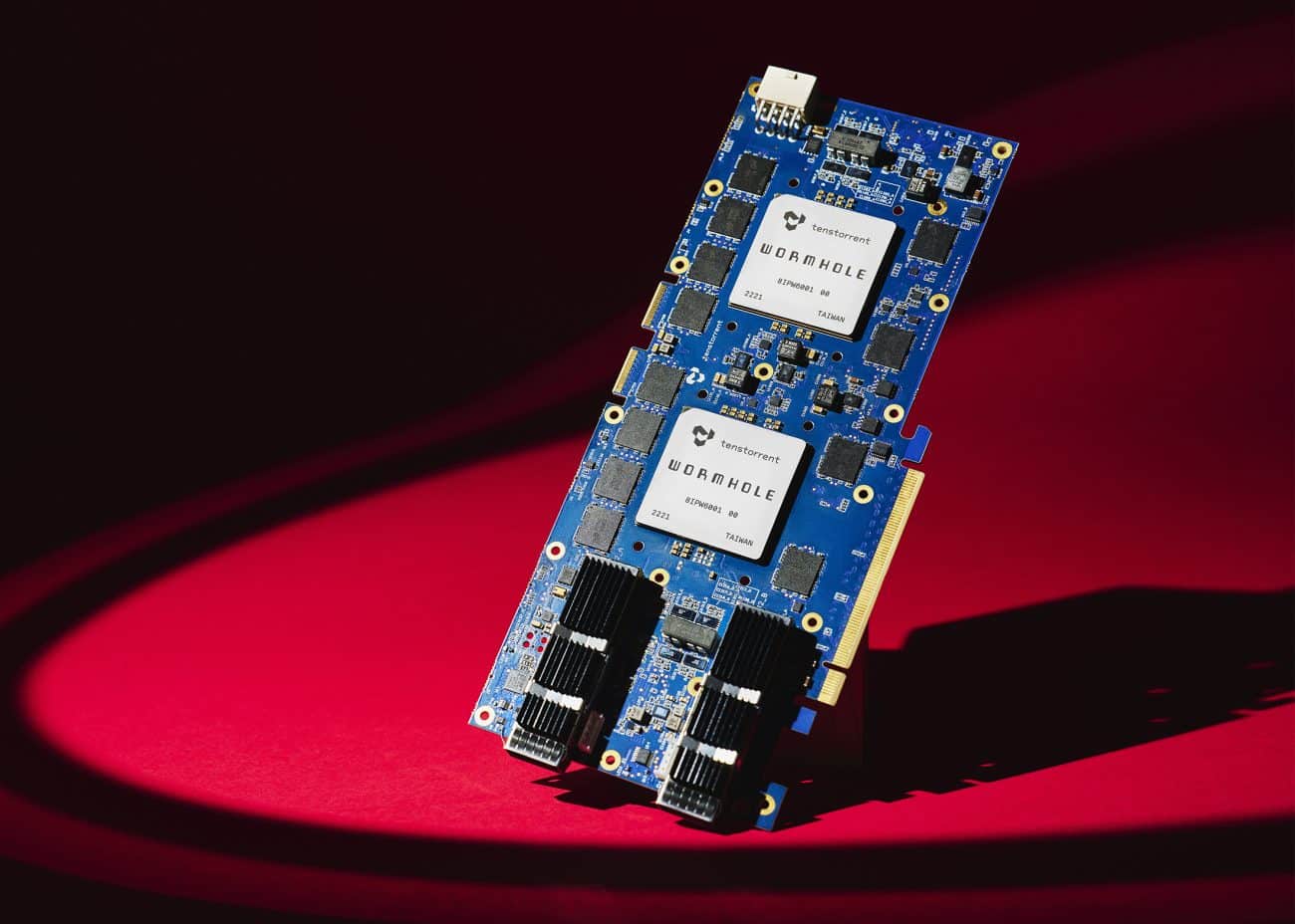

However, these chips are meant primarily for their internal use. Tenstorrent, on the other hand, sells its chips to enterprise customers, putting it in direct competition with NVIDIA.

“The company’s goal was to build boxes and compute platforms for high-end applications, like data centres and high-performance compute,” Witek said.

How Tenstorrent’s AI chips are better than NVIDIA GPUs

Because Tenstorrent builds its own hardware and provides two distinct software stacks, each optimised for the platform’s unique capabilities, users have the flexibility to tailor software and models to their needs.

Drawing on its expertise in chip design and intellectual property (IP), Tenstorrent describes this as a holistic, system-level strategy.

One of Tenstorrent’s biggest advantages is that its architecture packs more compute into each box without a large increase in power consumption or heat.

“So we have a very power-efficient compute, where we can put 32 compute engines in a box, the same size as NVIDIA puts eight in a box. With our higher compute density and similar power envelope, we outperform NVIDIA by multiples in terms of performance, output per watt, and output per dollar. Additionally, our software is open source and accessible to the community,” Witek said.

Tenstorrent’s AI chips eliminate the need for expensive interconnects

Tenstorrent’s AI chips are designed to minimise DRAM access, whereas GPUs access DRAM far more frequently. Because of this, NVIDIA relies on costly high-bandwidth memory (HBM) chips, which are connected to the GPU through expensive silicon interposers.

SK Hynix, which makes HBM chips for NVIDIA, announced that its HBM supply is already sold out for the year. Samsung has also reported revenue growth driven by strong demand for HBM chips.

These days, most data centre AI chips come equipped with HBM; however, Tenstorrent believes its chips can operate effectively without it.

“We achieve comparable or superior performance using more economical GDDR6 or GDDR7 memory and organic interposers. Consequently, our chips are more cost-effective without compromising performance. This is because our architecture incorporates local cache and routing within the chip, reducing the necessity to access DRAM for internal connections,” Witek said.
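To make the idea of on-chip reuse concrete, here is a simplified back-of-the-envelope model. It is an illustrative assumption, not Tenstorrent’s actual design or performance data. It counts how many matrix elements a matrix multiply C = A × B reads from DRAM when every output element re-fetches its operands, versus when each square tile of the output loads its slices of A and B into local memory once. The function names and the tile size are hypothetical.

```python
def dram_reads_naive(m: int, n: int, k: int) -> int:
    """Elements read from DRAM when no operand is reused on-chip.

    Each of the m * n outputs of C = A @ B (A is m x k, B is k x n)
    fetches its full row of A and column of B: 2 * k reads per output.
    """
    return m * n * 2 * k


def dram_reads_tiled(m: int, n: int, k: int, tile: int) -> int:
    """Elements read from DRAM when tiles are reused from local memory.

    Hypothetical simplified model: each tile x tile block of C loads a
    tile x k panel of A and a k x tile panel of B into local SRAM once,
    then computes the whole block without going back to DRAM.
    """
    if m % tile or n % tile:
        raise ValueError("m and n must be multiples of tile in this model")
    num_tiles = (m // tile) * (n // tile)
    return num_tiles * 2 * tile * k


if __name__ == "__main__":
    m = n = k = 1024
    naive = dram_reads_naive(m, n, k)
    tiled = dram_reads_tiled(m, n, k, tile=64)
    print(f"naive: {naive:,} reads")
    print(f"tiled: {tiled:,} reads")
    print(f"reduction: {naive // tiled}x")
```

For 1,024 × 1,024 matrices and a 64 × 64 tile, this model gives a 64-fold reduction in DRAM reads; in general the saving equals the tile size, which is why local memory capacity matters. Real chips also have to account for output writes, bandwidth limits and data movement between cores, which this sketch ignores.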

Witek also points out that NVIDIA relies on expensive interconnects such as NVLink and Mellanox (InfiniBand) networking to link boxes, racks and containers within a data centre. In contrast, Tenstorrent can achieve the same connectivity using Ethernet, which is both affordable and high-performing.

“So, why are people using NVLink and Mellanox? Well, they’re using it because NVIDIA can make a tremendous amount of money if they’re forced to use it. But architecturally, it’s not required that people spend that kind of money in their interconnection scheme in the data centre and we’re bringing that to the light of day with the architecture that we’re putting into the market,” Witek pointed out.

CUDA is a monster but has become unwieldy

Although NVIDIA is a hardware company with a monopolistic hold on the GPU space, its real competitive moat has been CUDA.

CUDA, short for Compute Unified Device Architecture, is a software layer that gives direct access to the GPU’s virtual instruction set and parallel computational elements for executing compute kernels.

For quite some time, Intel and AMD have been trying to challenge CUDA’s dominance with their own software stacks, oneAPI and ROCm respectively.

“CUDA has bloated over the years into a pretty large monster that can do a lot of different things, but it’s become very unwieldy,” Witek said.

“All the math libraries… and everything is encrypted, in fact, and NVIDIA is moving their platform more and more proprietary every quarter. They’re not letting AMD and Intel look at that platform and copy it. Programmers are avoiding CUDA whenever possible and writing a lot of code in C++ and other languages,” he continued.

By contrast, Tenstorrent has open-sourced its software stacks. It offers a platform named Metalium, which is similar to CUDA but less cumbersome and more user-friendly. On Metalium, users can write algorithms and program models directly against the hardware, bypassing layers of abstraction.

However, this requires a solid understanding of the hardware architecture, since users may have to find and fix errors themselves to get their code working correctly.

“It’s very much like CUDA, but we give you 100% open-source software and 100% open-source models. You can get on Hugging Face, download the model and run it; we make it very easy for you to do that,” Witek said.

Tenstorrent’s second software stack, called BUDA, represents the future the company ultimately envisions, according to Witek.

“Eventually, as compilers become more sophisticated and AI hardware stabilises, reaching a point where they can compile code with 90% efficiency, the need for hand-packing code in the AI domain diminishes.

“Although we haven’t reached that crossover point yet, many anticipate it within the next few years. Hence, we are continually enhancing BUDA’s efficiency to prepare for that eventual transition,” he concluded.