Despite having many important applications, artificial neural networks often face a number of problems. One such problem is the Vanishing Gradient Problem. When the neural networks are trained with gradient-based learning methods and backpropagation, they encounter the vanishing gradient problem. In this problem, at the time of training, the gradient starts getting smaller in size which prevents the neural networks from getting trained by not letting the network weights be changed. In this article, we will try to understand the vanishing gradient problem in detail along with the approach to resolve this problem. The major points to be covered in this article are listed below.

Let us begin with understanding the vanishing gradient problem.

What is a Vanishing Gradient Problem?

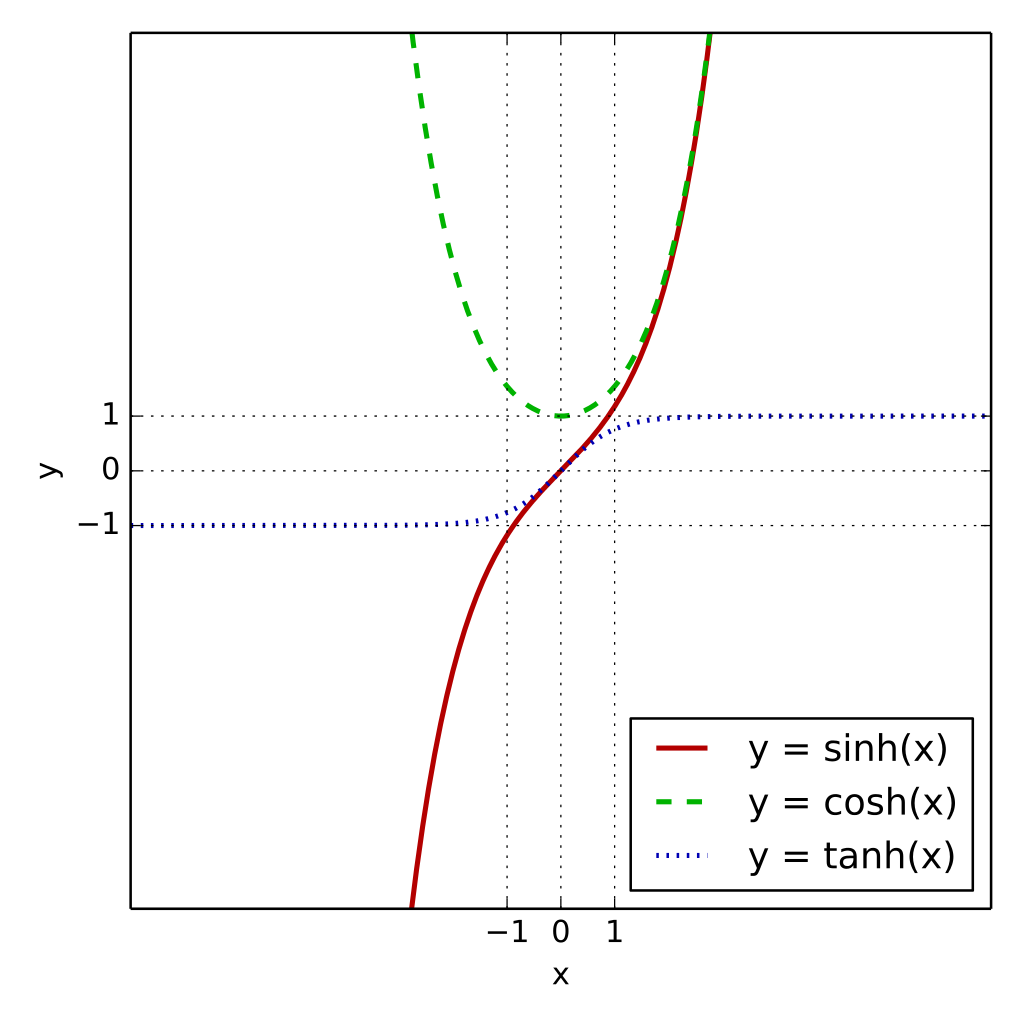

As we know, using more layers in any neural network causes more activation function with them and as we increase the number or activation function increases the gradient of loss function leads to zero. Let’s take a simple example of any neural network where we are using any layer in a layered neural network with hyperbolic tangent function, and have gradients in the range between 0 to 1. This function will be multiplying n of these(0-1) small numbers to compute gradients of the preceding layers, meaning that the gradient decreases exponentially with n. So the function gives the output between the range of 0 to 1. This means that the output from the tanh activation function does not depend on the size of input data. The image represents a hyperbolic tangent activation function.

So while using the function we can say that a large change in the input space will be very small in the output. The vanishing gradients problem is one example of the unstable behaviour of a multilayer neural network. Networks are unable to backpropagate the gradient information to the input layers of the model.

In a multi-layer network, gradients for deeper layers are calculated as products of many gradients (of activation functions). When those gradients are small or zero, they will easily vanish. (On the other hand, when they’re bigger than 1, it will possibly explode.) So it becomes very hard to calculate and update.

The VGP occurs when the elements of the gradient (the partial derivatives with respect to the parameters of the NN) become exponentially small so that the update of the parameters with the gradient becomes almost insignificant

Recognizing The Vanishing Gradients

- We can detect it by analysing the kernel weight distribution. There is a vanishing gradient if the weights are falling regularly near zero.

- This problem can be recognised when a neural network is very slow in training.

- Neural networks are not well trained with the data which we are using or showing unusual behaviour regarding results.

How to Resolve the Vanishing Gradient Problem?

There are various methods that help in overcoming the vanishing gradient problems:

- Multi-level hierarchy

- The long short term memory

- Residual neural network

- ReLU

Let us understand these approaches one by one.

Multi-Level Hierarchy

It is one of the most basic and older solutions for a multilayer neural network model facing the vanishing gradient problem. It is simply a method that follows the procedure of training one level at a time and fine-tuning the level by backpropagation. So that every layer learns a compressed observation which goes ahead for the next level.

Long Short-Term Memory(LSTM)

So as of now, we have seen there are two major factors that affect the gradient size – weights and their derivatives of the activation function. A simple LSTM helps the gradient size to remain constant. The activation function we use in the LSTM often works as an identity function which is a derivative of 1. So in gradient backpropagation, the size of the gradient does not vanish.

Let’s understand the image below.

According to the above image, the effective weight of the gradient is equal to the forget gate activation. So, if the forget gate is on (activation close to 1.0), then the gradient does not vanish. That is why LSTM is one of the best options to deal with long-range dependencies. More powerful in the recurrent neural network.

Residual Neural Network

The residual neural networks were not introduced to solve the vanishing gradient problem but they have special connections which makes it different from the other neural networks that are a residual connection, residual connection in the neural network make the model learn well and the batch normalization feature makes sure that the gradients will not be vanishing. These batch normalization features are obtained by the skip connection.

The skip or bypass connection is useful in any network to bypass the data from a few layers. Basically, it allows information to skip the layers. Using these connections, information can be transferred from layer n to layer n+t. Here to perform this thing we need to connect the activation function of layer n to the activation function of n+t. This causes the gradient to pass between the layers without any modification in size.

As we have discussed, activation functions keep multiplying the finite small numbers to the weights. For example, ½*½=¼ and then ½*¼=⅛ and so on. Here in the example, we can say the number of layers increases the chances of VGP. The skip of layers will help the weight of information pass from layers without vanishing.

Therefore, skip connections can mitigate the VGP, and so they can be used to train deeper NNs.

The above image represents the architecture of the ResNet. ResNet stands for residual network.

Rectified Linear Unit (ReLU) Activation Function

ReLU is an activation function more deeply it is a linear activation function. Which is like a sigmoid and tanh activation function but better than them. The basic function for ReLU conversion of input can be represented as

f(x) = max(0,x)

Where the ReLU function is its derivatives are constant. If in input the function gets a negative it returns 0 or if the input is greater than 0 it returns a similar value back. That is why we can say the output from the ReLU has ranged between 0 to infinity.\

The above image represents the output of the ReLU function. Now let’s see how it helps in vanishing gradient problems.

When we talk about the backpropagation procedure, whichever gradient gets updated by multiplying with the multiple factors. As the information goes towards the start of the network the more factors are multiplied together to update the gradient. Many of these factors can be considered as the activation function. The activation function derivatives can be considered as a kind of tuning parameter, designed to get the accurate gradient descent.

In the above we have seen that if we multiply a bunch of numbers with a value less than 1, they will start to tend to zero hence the gradient we get from the output layer will be negligible. In this scenario, if we multiply a number with a greater value than 1 they will tend towards infinity. So where the values are less than one we will get a slope that will be less than one and here comes the vanishing gradient problem.

But if somehow we get the contribution of these derivatives of the activation function as 1 we can resolve the gradient vanishing problem of the model. Basically, in this situation, we can say that every gradient update is contributing to the model from input to the output or model. Here for this ReLU comes in the picture which has only two gradients 0 or 1.

- Gradient one when the output of the function is > 0.

- Gradient zero when the output of the function is < 0.

Hence these bunch of derivatives give either 0 or either 1 when multiplying together. The backpropagation equation will have only two options of either being 1 or being 0. The update is either nothing or takes contributions entirely from the other weights and biases.

Final Words

This article is aimed to discuss the issue that we can face while training the neural network in following the backpropagation procedure. We have seen how the problem occurs when the weights recurring are very less and tend towards zero. This kind of issue often occurs with the network with many numbers of layers, it barely occurs when the network is shallow. So if the long time taking problem is there but the network has low layers we should check for the computer configuration and if the 25% of your kernel weights are falling down to zero it should not be considered as the vanishing gradient problem.