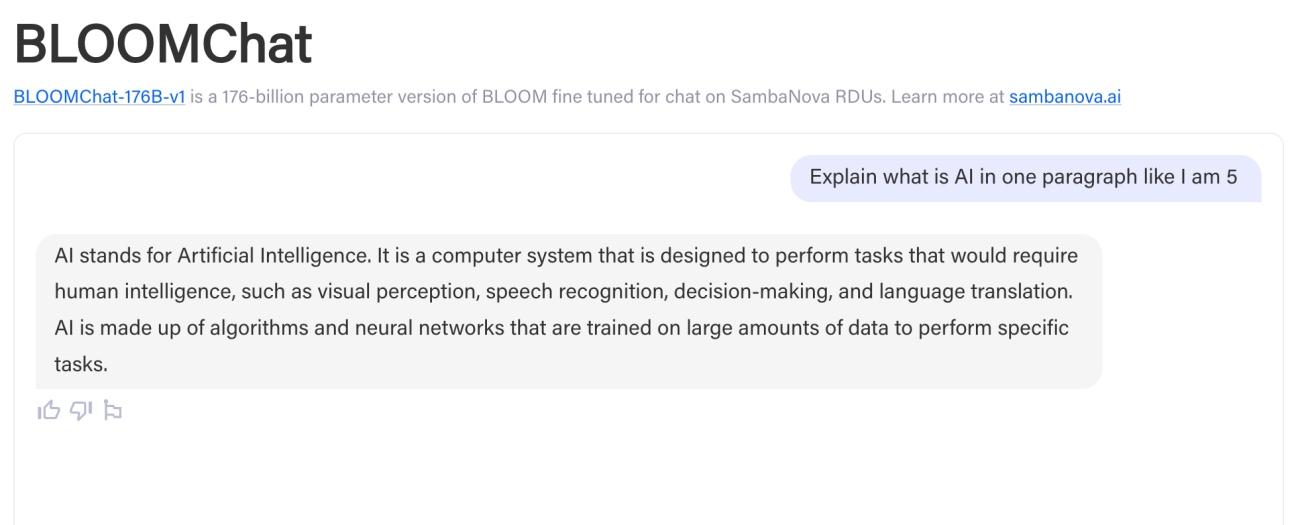

Just when it seemed that BLOOM was not going anywhere, Palo Alto-based SambaNova, in collaboration with Together, launched BLOOMChat, an open-source, multilingual chatbot built on top of BLOOM-176B, the multilingual LLM pre-trained by the BigScience group.

Built for research and commercial use, BLOOMChat is offered under a modified version of the Apache 2.0 licence that incorporates the RAIL usage restrictions derived from BLOOM.

The launch blog post explains that to build the model, SambaNova fine-tuned BLOOM-176B on LAION’s OIG dataset from OpenChatKit, Dolly 2.0, and OASST1.

BLOOM is one of the largest open-source multilingual models, covering 46 languages. By fine-tuning it on open conversation and alignment datasets, the company was able to adapt it into an interactive chatbot.

The chatbot was trained on SambaNova RDUs (Reconfigurable Dataflow Units). In a human preference study conducted across six languages, it achieved a win rate of 45.25% against GPT-4’s 54.75%.
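For readers unfamiliar with the metric, a win rate in a head-to-head preference study is simply each model's share of the votes cast. The Python sketch below illustrates that arithmetic only. The function name `win_rates` and the vote tallies are hypothetical, chosen so that the percentages match the reported figures; the study's actual sample size and tie handling are not stated here, so the sketch assumes every vote picks exactly one model.

```python
from collections import Counter


def win_rates(votes):
    """Return each model's share of pairwise preference votes, in percent.

    Assumes every vote names exactly one preferred model (no ties).
    """
    counts = Counter(votes)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("at least one vote is required")
    return {model: 100 * n / total for model, n in counts.items()}


# Hypothetical tallies (NOT the study's real counts): 400 votes split so
# that the shares reproduce the reported 45.25% and 54.75%.
votes = ["BLOOMChat"] * 181 + ["GPT-4"] * 219
rates = win_rates(votes)

for model, rate in rates.items():
    print(f"{model}: {rate:.2f}%")
```

Because each vote goes to one of the two models, the two shares always sum to 100%, which is why the reported figures are complements of each other.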

SambaNova said that the need for a multilingual LLM has long existed, since most recent open models, such as OpenChatKit, Dolly 2.0, LLaMA-Adapter-V2-65B, and Vicuna, focus only on English. The model is still slightly behind GPT-4 on performance, but shows competitive results.

Moreover, BLOOMChat has shown strong capabilities in WMT translation tasks, outperforming other BLOOM variants as well as mainstream open-source chat models.

SambaNova also said that the model is preferred 66% of the time compared to mainstream open-source chat LLMs.

The company admits that the model still has several limitations, including hallucination, code switching, repetition, weak maths, and a tendency towards toxicity.

BLOOM-ing Slowly, but Surely

BLOOM was developed in the open by a group of 1,000 researchers from 60+ countries and 250+ institutions. The 176-billion-parameter LLM can generate text in 46 natural languages and dialects and 13 programming languages. Progress, however, has been slow compared to other open-source models mushrooming in the space.

For instance, Meta’s LLaMA has been making noise in the open-source ecosystem ever since its leak on GitHub a few months ago, with developers experimenting with it to reduce the model’s memory requirements and more. Soon came Vicuna, Alpaca and others, which have been instrumental in significantly lowering training and inference costs compared with closed-door, multimillion-dollar-backed companies training similar models.

Recently, Hugging Face also launched HuggingChat. Just like BLOOMChat, this chatbot provides various functionalities and integrations catering to both developers and researchers, making OpenAI count its ChatGPT days.