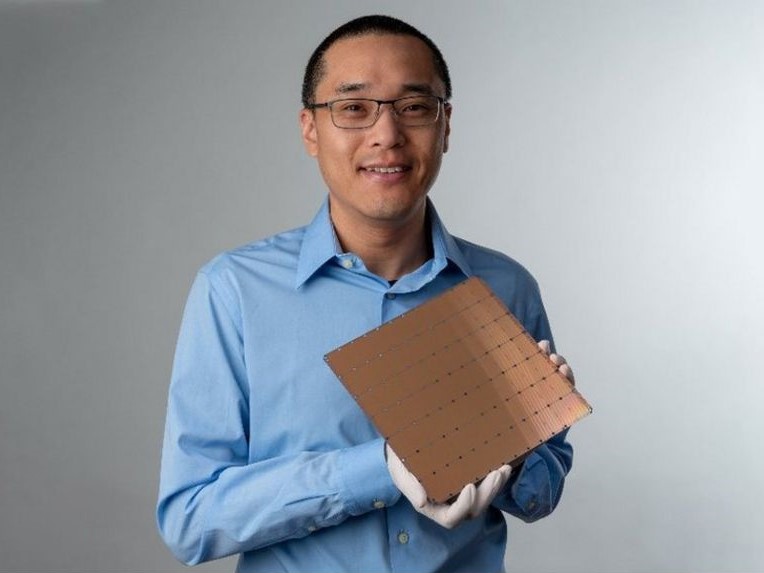

Cerebras Systems is known for its avant-garde chip designs. The chipmaker has once again turned heads with the announcement of the Wafer Scale Engine 2 (WSE-2), the world’s largest chip, built on a 7nm process node.

Measuring 46,225 mm² (more than 50 times the size of the largest GPU) and packing 850,000 cores, the WSE-2 has roughly 123 times as many cores as the Ampere A100, NVIDIA’s largest GPU, which has 54 billion transistors and 6,912 CUDA cores.
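The comparison above comes down to simple division, which the short Python sketch below works through. The WSE-2 figures and the A100 transistor count are the ones quoted in this article. The A100 core count (6,912 CUDA cores) and die area (about 826 mm²) are assumptions taken from NVIDIA's published specifications, and the names `WSE2`, `A100` and `ratios` are purely illustrative.

```python
# WSE-2 figures as quoted in the article.
WSE2 = {"cores": 850_000, "area_mm2": 46_225, "transistors": 2.6e12}

# A100 figures: the transistor count is from the article; the core count and
# die area are assumptions taken from NVIDIA's published specifications.
A100 = {"cores": 6_912, "area_mm2": 826, "transistors": 54e9}


def ratios(big: dict, small: dict) -> dict:
    """Return how many times larger each figure of `big` is than in `small`."""
    return {key: big[key] / small[key] for key in big}


wse2_vs_a100 = ratios(WSE2, A100)

for name, value in wse2_vs_a100.items():
    print(f"{name}: {value:.1f}x")
```

With these inputs, the core ratio comes out at about 123x, the area ratio at about 56x and the transistor ratio at about 48x.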

At a time when the semiconductor industry is striving to build ever smaller components, the introduction of the first Wafer Scale Engine (WSE-1) caught everyone off guard. Cerebras Systems designed and manufactured the largest chip ever built, aimed exclusively at deep learning. Deep learning is one of the most computationally intensive workloads. It is also time-consuming, because inputs pass repeatedly through many layers to extract higher-level features from the raw data. Training time falls when each pass through those layers takes less time, which can be achieved by adding cores to raise calculation throughput. The Wafer Scale Engine was built to address this need.

Two years ago, Cerebras challenged Moore’s Law with the first Cerebras Wafer Scale Engine (WSE). With 1.2 trillion transistors, that previous-generation chip outpaced Moore’s Law by a huge margin. Moore’s Law states that the number of transistors on a microchip doubles roughly every two years, while the cost of computers is halved.

So, what’s the catch?

According to Our World in Data, as of 2019 the next-largest transistor count for a microprocessor belonged to AMD’s Epyc Rome processor, with 39.54 billion transistors. The WSE might well be the tipping point for the semiconductor industry, bringing forth a new era of AI chips that quadruple the number of transistors every year. The newly developed WSE-2, with 2.6 trillion transistors, is proof of that possibility.
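To put the gap in perspective, the sketch below applies the doubling-every-two-years rule to the transistor counts quoted in this article. It assumes an idealised, perfectly smooth Moore's Law curve, which real chips only loosely follow. The function and constant names are illustrative.

```python
import math

DOUBLING_PERIOD_YEARS = 2.0  # Moore's Law as stated in the article


def projected_transistors(start_count: float, years: float) -> float:
    """Transistor count after `years` of doubling every two years."""
    return start_count * 2 ** (years / DOUBLING_PERIOD_YEARS)


def years_to_reach(start_count: float, target_count: float) -> float:
    """Years of Moore's Law growth needed to go from start to target."""
    return DOUBLING_PERIOD_YEARS * math.log2(target_count / start_count)


# Transistor counts quoted in the article.
EPYC_ROME = 39.54e9  # AMD Epyc Rome, 2019
WSE_1 = 1.2e12       # first-generation Wafer Scale Engine
WSE_2 = 2.6e12       # Wafer Scale Engine 2

years_to_wse1 = years_to_reach(EPYC_ROME, WSE_1)
years_to_wse2 = years_to_reach(EPYC_ROME, WSE_2)

print(f"Years of doubling to match the WSE:   {years_to_wse1:.1f}")
print(f"Years of doubling to match the WSE-2: {years_to_wse2:.1f}")
```

Under these assumptions, a processor starting from Epyc Rome's transistor count would need a little under ten years of steady doubling to match the first WSE, and about twelve years to match the WSE-2.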

So, what was the purpose of introducing such a big chip? Was it just to prove Moore’s Law wrong? The answer is much more practical. According to Andrew Feldman, co-founder and CEO of Cerebras Systems, the logic behind the size of the WSE is quite simple. Training AI models typically requires large amounts of data, so processing speed is crucial.

Size has always been a limitation in machine learning processors, but everything changed when Cerebras introduced its revolutionary design. By building a wafer-scale chip on a single piece of silicon, Cerebras avoided performance issues such as slow off-chip communication, low memory bandwidth and distant memory. Since memory is a crucial component, its placement affects the processor drastically: the closer it sits to the cores, the faster the calculations run and the less power is spent moving data. High-performance deep learning requires each core to operate at peak efficiency, which in turn requires close collaboration and proximity between core and memory.

In other words, the WSE achieves cluster-scale performance without the penalties of building large clusters or the limitations of distributed training. The Cerebras WSE-2 currently powers the CS-2 system. The new processor has 2.6 trillion transistors and 850,000 AI cores, all packed onto a single silicon wafer to deliver leading AI compute density at unprecedentedly low latencies. It accelerates deep learning compute and reduces the time it takes to train models, enabling tasks to be completed in a few minutes. The Cerebras WSE-2 has pushed past the barriers in AI chip development, ushering in a change in AI chip design and performance.