Deep learning is a technique that is gaining a foothold across multiple disciplines, enabling self-driving cars, predictive fault monitoring of jet engines, time series forecasting in financial markets, and other use cases.

In MATLAB, it takes only a few lines of code to build a machine learning or deep learning model, without needing to be a specialist in the techniques. MATLAB provides an environment well suited to deep learning, from model training through deployment.

In this article, we look at why MATLAB is gaining popularity for deep learning.

Why MATLAB

The MATLAB programming platform has several advantages over other techniques or languages. Its basic data element is the matrix: even a simple integer is treated as a matrix of one row and one column. Many mathematical operations that work on arrays or matrices are built into the MATLAB environment, for instance cross products, dot products, determinants, and inverse matrices. Vectorized operations, such as adding two arrays together, need only one command instead of a for or while loop.

The graphical output is optimized for interaction. Users can plot their data very simply and then modify colours, sizes, scales, and so on using the interactive graphical tools. MATLAB's functionality can be considerably expanded by adding toolboxes, which are sets of specific functions that provide more specialized functionality. These features make the programming language very effective for implementing deep learning.

The following are the fundamental features of MATLAB:

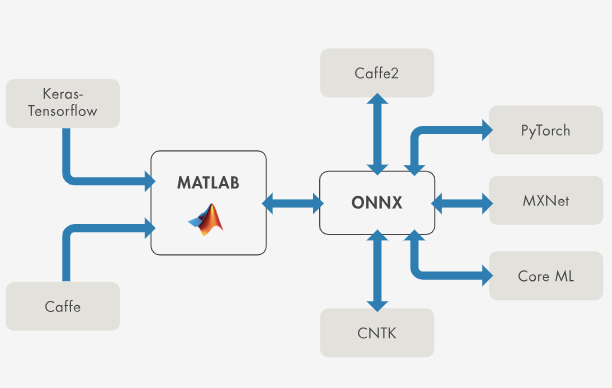

Interoperability

MATLAB supports interoperability with open-source deep learning frameworks through the ONNX model exchange format. Users can turn to MATLAB for capabilities, prebuilt functions, and apps that are not available in other programming languages.

Scope For Preprocessing

MATLAB supports preprocessing datasets with domain-specific apps for audio, video, and image data. Users can visualize, check, and fix problems before training, and can use the Deep Network Designer app to build complex network architectures or modify trained networks for transfer learning.

Multi-Platform Deployment

MATLAB can deploy deep learning models in many places, including as CUDA or C code, on enterprise systems, or in the cloud. When performance matters, a user can generate code that uses optimized libraries from Intel (MKL-DNN), NVIDIA (TensorRT, cuDNN), and ARM (ARM Compute Library) to build deployable models with high-performance inference.

Deep Learning Toolbox

Deep Learning Toolbox provides a framework for designing and implementing deep neural networks with algorithms, pretrained models, and apps. A user can apply convolutional neural networks (CNNs) and long short-term memory (LSTM) networks to perform classification and regression on image, time-series, and text data. Apps and plots help users visualize activations, edit network architectures, and monitor training progress.

For small training sets, a user can perform transfer learning with pretrained deep network models and models imported from TensorFlow-Keras and Caffe.

To speed up training on large datasets, users can distribute computations and data across multicore processors and GPUs on the desktop, or scale up to clusters and clouds, including Amazon EC2® P2, P3, and G3 GPU instances.

MatConvNet

MatConvNet is an implementation of Convolutional Neural Networks (CNNs) for MATLAB. The toolbox is designed with an emphasis on simplicity and flexibility. It exposes the building blocks of CNNs as easy-to-use MATLAB functions, providing routines for computing linear convolutions with filter banks, feature pooling, and many more. MatConvNet allows fast prototyping of new CNN architectures and, at the same time, supports efficient computation on CPU and GPU, which makes it possible to train complex models on large datasets.
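To make the two building blocks named above concrete, here is a minimal sketch of what a convolution with a filter bank and a pooling step compute. It is written in plain Python for illustration only and is not MatConvNet's API: the function names, the sample image, and the two filters are all assumptions. Like most CNN libraries, it slides the filter without flipping it (strictly speaking, a cross-correlation).

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def apply_filter_bank(image, bank):
    """Apply every filter in `bank`; the result holds one feature map per filter."""
    return [conv2d_valid(image, kernel) for kernel in bank]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


# Hypothetical 4x4 single-channel image and a bank of two 2x2 filters.
image = [
    [1, 2, 0, 1],
    [0, 1, 3, 2],
    [2, 0, 1, 0],
    [1, 3, 0, 2],
]
bank = [
    [[1, 0], [0, -1]],  # difference along the diagonal
    [[1, 1], [1, 1]],   # local sum
]

feature_maps = apply_filter_bank(image, bank)   # two 3x3 feature maps
pooled = [max_pool(fm) for fm in feature_maps]  # each reduced by 2x2 max pooling
```

A toolbox implements the same operations over batches and channels and runs them on optimized CPU or GPU kernels; the nested loops here only show the arithmetic.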

Cuda-Convnet

This library is a fast C++/CUDA implementation of convolutional neural networks. It can model arbitrary layer connectivity and network depth: any directed acyclic graph of layers is accepted. Training is done using the back-propagation algorithm.
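Accepting any acyclic graph of layers implies that the layers can always be evaluated in an order in which each layer comes after its inputs. The sketch below shows one common way to find such an order (Kahn's topological sort) and to reject graphs that contain a cycle. It is an illustration in plain Python, not code from the library, and the layer names are hypothetical.

```python
from collections import deque


def topological_order(layers):
    """Return layer names in an order where every layer follows its inputs.

    `layers` maps a layer name to the list of layers feeding into it.
    Raises ValueError if the connections contain a cycle.
    """
    indegree = {name: len(inputs) for name, inputs in layers.items()}
    consumers = {name: [] for name in layers}
    for name, inputs in layers.items():
        for source in inputs:
            consumers[source].append(name)

    # Layers with no inputs can be evaluated immediately.
    ready = deque(name for name, degree in indegree.items() if degree == 0)
    order = []
    while ready:
        current = ready.popleft()
        order.append(current)
        for consumer in consumers[current]:
            indegree[consumer] -= 1
            if indegree[consumer] == 0:
                ready.append(consumer)

    # Any layer left unvisited is part of a cycle.
    if len(order) != len(layers):
        raise ValueError("layer graph contains a cycle")
    return order


# Hypothetical network with a branch that is merged again before the output.
network = {
    "data": [],
    "conv1": ["data"],
    "pool1": ["conv1"],
    "conv2a": ["pool1"],
    "conv2b": ["pool1"],
    "merge": ["conv2a", "conv2b"],
    "softmax": ["merge"],
}
order = topological_order(network)
```

The forward pass would visit the layers in `order`, and back-propagation would visit them in the reverse order.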

Backpropagation is a method used in artificial neural networks (ANNs) to calculate the gradient that is needed to update the weights of the network. The name is shorthand for "the backward propagation of errors", since an error is calculated at the output and distributed backwards through the network's layers. It is commonly used to train deep neural networks.

Cuda-Convnet uses backpropagation, which is a generalization of the delta rule to multi-layered feedforward networks, made possible by applying the chain rule to iteratively compute gradients for each layer. It is closely related to the Gauss-Newton algorithm and is part of continuing research in neural backpropagation.
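The chain-rule computation described above can be sketched on the smallest possible network: one input, one sigmoid hidden unit, and one linear output, trained with a squared-error loss. The network shape, parameter values, and function names are assumptions chosen for illustration; this is plain Python, not code from any of the libraries mentioned. The gradient for the output weight is the error times the unit's input, which is the delta-rule form; the hidden-layer gradient reuses that error, passed backwards through the output weight and the sigmoid's derivative.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass of a 1-1-1 network: sigmoid hidden unit, linear output."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y = w2 * h + b2
    return h, y


def loss(params, x, target):
    """Squared-error loss for a single training example."""
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backprop(params, x, target):
    """Gradient of the loss w.r.t. (w1, b1, w2, b2), computed with the chain rule."""
    w1, b1, w2, b2 = params
    h, y = forward(params, x)
    delta_out = y - target                       # error at the output
    delta_hidden = delta_out * w2 * h * (1 - h)  # error propagated back one layer
    return [delta_hidden * x, delta_hidden, delta_out * h, delta_out]


def numerical_gradient(params, x, target, eps=1e-6):
    """Central finite differences, used only to check the backprop result."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        down = list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, target) - loss(down, x, target)) / (2 * eps))
    return grads


# Hypothetical starting parameters and a single training example.
params = [0.5, -0.2, 1.5, 0.1]
x, target = 1.0, 0.0

analytic = backprop(params, x, target)
numeric = numerical_gradient(params, x, target)

# One gradient-descent step with a small learning rate.
learning_rate = 0.1
updated = [p - learning_rate * g for p, g in zip(params, analytic)]
```

Comparing `analytic` with `numeric` is a common sanity check for a hand-written backward pass, and the loss evaluated at `updated` should be lower than at `params`.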